Contents

HPE Smart Array P824i-p MR Gen10.....................................................5

Features................................................................................................... 6

Controller supported features....................................................................................................... 6

Operating environments.....................................................................................................6

RAID technologies..............................................................................................................6

Transformation................................................................................................................... 7

Drive technology.................................................................................................................7

Security.............................................................................................................................. 7

Reliability............................................................................................................................7

Performance.......................................................................................................................7

RAID technologies........................................................................................................................ 8

Selecting the right RAID type for your IT infrastructure......................................................8

Mixed mode (RAID and JBOD simultaneously)............................................................... 12

Make Unconfigured Good and Make JBOD.....................................................................12

Patrol read........................................................................................................................12

Striping............................................................................................................................. 12

Mirroring........................................................................................................................... 13

Parity................................................................................................................................ 15

Spare drives..................................................................................................................... 19

Drive rebuild..................................................................................................................... 20

Foreign configuration import............................................................................................ 20

Transformation............................................................................................................................ 20

Array transformations.......................................................................................................20

Logical drive transformations........................................................................................... 20

Drive technology......................................................................................................................... 21

HPE SmartDrive LED.......................................................................................................21

Consistency check........................................................................................................... 23

Online drive firmware update........................................................................................... 23

Discarding pinned cache..................................................................................................23

Dynamic sector repair...................................................................................................... 23

Security....................................................................................................................................... 24

Drive erase.......................................................................................................................24

Sanitize erase...................................................................................................................24

Reliability.....................................................................................................................................25

Recovery ROM.................................................................................................................25

Cache Error Checking and Correction (ECC).................................................................. 25

Thermal monitoring.......................................................................................................... 25

Performance................................................................................................................................25

SAS storage link speed....................................................................................................25

HPE Smart Array MR FastPath........................................................................................25

HPE Smart Array MR CacheCade................................................................................... 26

Cache...............................................................................................................................26

Installation............................................................................................. 29

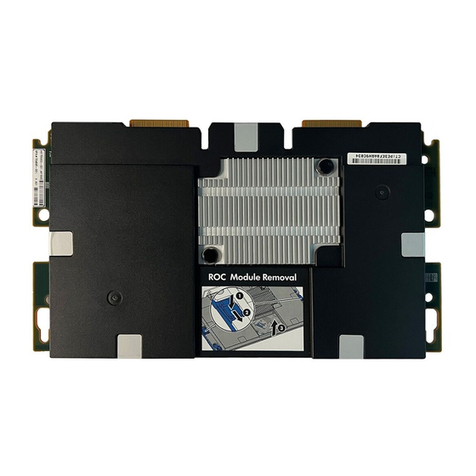

Installation...................................................................................................................................29

Installing an HPE Smart Array P824i-p MR Gen10 controller in a configured server...... 29
