Cray ClusterStor H-6167 User manual

ClusterStor™ MMU/AMMU Addition

(3.3)

H-6167

Contents

About ClusterStor™ MMU and AMMU Addition

Add an MMU or AMMU to a ClusterStor™ L300 and L300N System

MMU/AMMU Addition Process

Prerequisites for MMU/AMMU Addition

Cable MMU/AMMU Hardware to the Storage System

Unmount Lustre and Auto-Discovery

Verify MDS Node Discovery

Complete the MMU/AMMU Addition Procedure

Sample Output for configure_mds

Tips and Tricks

Failed Recovery or MMU/AMMU Added Out of Sequence

Node Stuck in Discovery Prompt

Reconnect a Screen Session

Check Puppet Certificate

SSH Connection Refused / Puppet Certificates

SSH Connection Refused-- MDS/OSS Node Not Fully Booted

No Free Arrays / Wrong md Assignment

Check ARP, Local Hosts, and DHCP

Post Upgrade Check

Test Mount Lustre File System on Node 001


About ClusterStor™ MMU and AMMU Addition

ClusterStor™ MMU and AMMU Addition (3.3) H-6167 includes instructions for adding Metadata Management Units (MMUs) or Advanced Metadata Management Units (AMMUs) to a storage system in the field. MMUs and AMMUs allow the system to take advantage of the Lustre Distributed Namespace (DNE) feature introduced with Lustre 2.5. An AMMU is intended for systems that require a high single-directory file create and metadata operation rate.

MMUs are supported for ClusterStor L300 and L300N systems running releases 2.1.0, 3.0.0, 3.1, 3.2, and 3.3. AMMUs are supported only for L300 and L300N systems running releases 3.0.0, 3.1, 3.2, and 3.3. Only one (1) AMMU, located in the base rack, is supported in release 3.0.0. Beginning with release 3.1, systems support up to two (2) AMMUs (one [1] in the base rack).

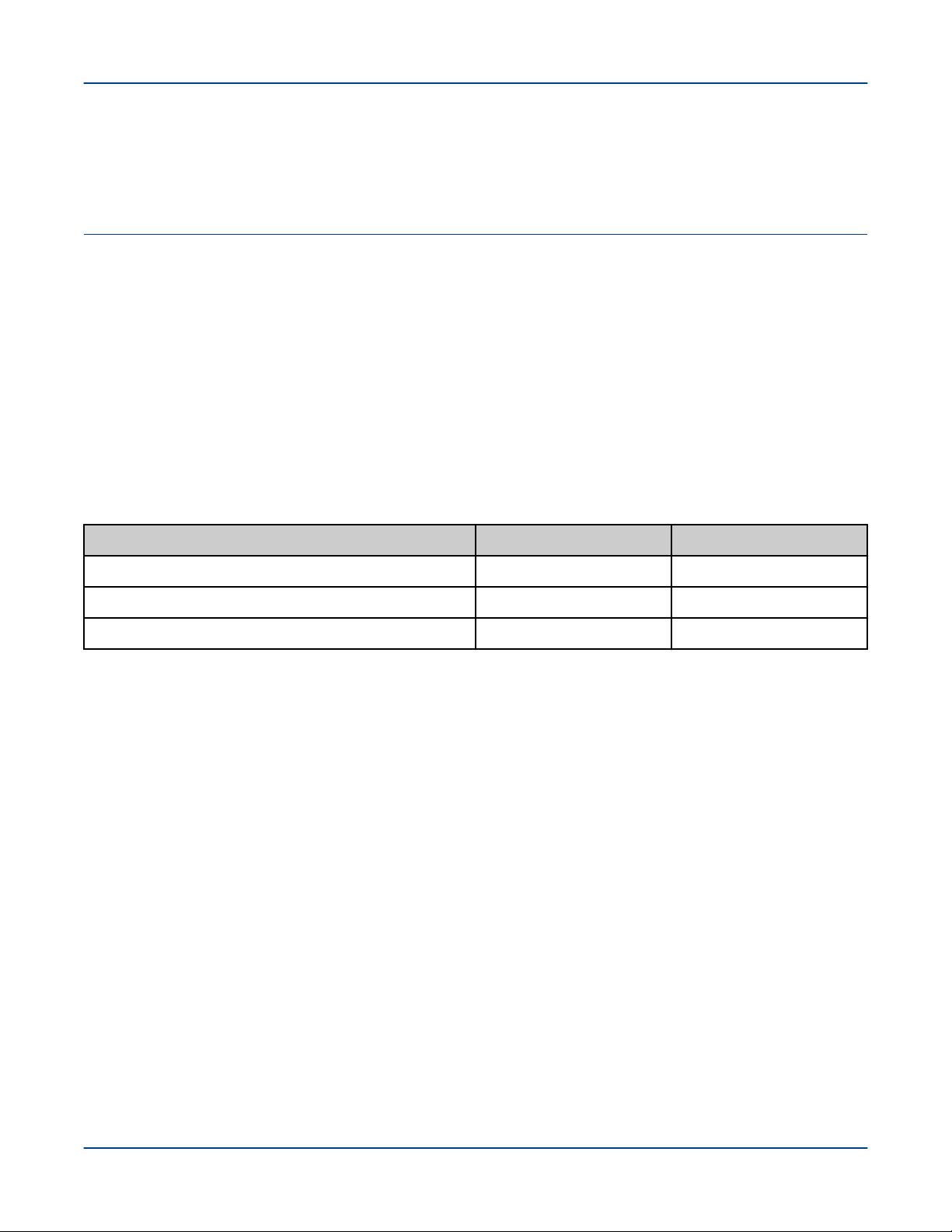

Record of Revision: Publication H-6167

Table 1. Record of Revision

| Publication Title | Date | Updates |
|---|---|---|
| ClusterStor MMU/AMMU Addition (3.3) H-6167 | December 2019 | Release of 3.3 version |
| ClusterStor MMU/AMMU Addition (3.2) H-6167 | August 2019 | Release of 3.2 version |
| ClusterStor MMU/AMMU Addition (3.1) H-6167 | February 2019 | Release of 3.1 version |

Scope and Audience

The procedure in this publication is intended to be performed only by qualified Cray personnel.

Typographic Conventions

| Convention | Meaning |
|---|---|
| Monospace | A monospace font indicates program code, reserved words or library functions, screen output, file names, path names, and other software constructs. |
| Monospaced Bold | A bold monospace font indicates commands that must be entered on a command line. |
| Oblique or Italics | An oblique or italics font indicates user-supplied values for options in the syntax definitions. |
| Proportional Bold | A proportional bold font indicates a user interface control, window name, or graphical user interface button or control. |
| \ (backslash) | At the end of a command line, indicates the Linux® shell line continuation character (lines joined by a backslash are parsed as a single line). |


Other Conventions

Sample commands and command output used throughout this publication are shown with a generic file system name of cls12345.

Trademarks

© 2019, Cray Inc. All rights reserved. All trademarks used in this document are the property of their respective owners.


Add an MMU or AMMU to a ClusterStor™ L300 and L300N System

The following topics describe how to add Metadata Management Units (MMUs) or Advanced Metadata Management Units (AMMUs) to a storage system in the field. MMUs and AMMUs allow the system to take advantage of the Lustre Distributed Namespace (DNE) feature introduced with Lustre 2.5.

MMUs are supported for ClusterStor L300 and L300N systems running releases 2.1.0, 3.0.0, 3.1, 3.2, and 3.3. AMMUs are supported only for L300 and L300N systems running releases 3.0.0, 3.1, 3.2, and 3.3. Only one (1) AMMU, located in the base rack, is supported in release 3.0.0. Beginning with release 3.1, systems support up to two (2) AMMUs (one [1] in the base rack).
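
The release support rules above can be captured in a small lookup, which may help when checking a site before planning an addition. This is a minimal sketch: the release lists are transcribed from the paragraph above, while the names `SUPPORTED_RELEASES`, `is_supported`, and `max_ammus` are illustrative and do not correspond to any ClusterStor tool.

```python
# Support matrix transcribed from the text above.
# All names here are hypothetical helpers, not part of any ClusterStor software.
SUPPORTED_RELEASES = {
    "MMU": {"2.1.0", "3.0.0", "3.1", "3.2", "3.3"},
    "AMMU": {"3.0.0", "3.1", "3.2", "3.3"},
}


def is_supported(unit: str, release: str) -> bool:
    """Return True if the unit type ("MMU" or "AMMU") is supported on the release."""
    return release in SUPPORTED_RELEASES.get(unit, set())


def max_ammus(release: str) -> int:
    """Return the maximum number of AMMUs the text allows for a release."""
    if not is_supported("AMMU", release):
        return 0
    # Release 3.0.0 allows a single AMMU (in the base rack); 3.1 and later allow two.
    return 1 if release == "3.0.0" else 2
```

For example, `is_supported("AMMU", "2.1.0")` evaluates to `False`, because AMMU support begins with release 3.0.0.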

CAUTION: This procedure is intended to be performed only by qualified Cray personnel.

Lustre 2.5 and later releases support Phase 1 of the Lustre DNE feature, which allows multiple MDTs, operating through multiple MDS nodes, to be configured and to operate as part of a single file system. This feature allows the number of metadata operations per second within a cluster to scale beyond the capabilities of a standard storage system's single MDS. Achieving this capability requires that the file system namespace be configured manually so that file system operations are evenly distributed across the MDS/MDT resources.
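
As an illustration of what manual namespace configuration can look like, the sketch below plans a round-robin assignment of top-level directories to MDT indices and emits the corresponding `lfs mkdir -i` commands (the standard Lustre client command for creating a directory on a specific MDT). The round-robin policy, the mount point `/mnt/lustre`, the directory names, and the function name `plan_directories` are all assumptions made for the example; this publication does not prescribe a particular layout. The script only builds command strings and does not run them.

```python
from itertools import cycle


def plan_directories(dirs, mdt_count, mount_point="/mnt/lustre"):
    """Assign each directory to an MDT index in round-robin order.

    Returns a list of `lfs mkdir -i <index> <path>` command strings.
    The mount point and directory names are hypothetical placeholders.
    """
    if mdt_count < 1:
        raise ValueError("mdt_count must be at least 1")
    indices = cycle(range(mdt_count))
    return [f"lfs mkdir -i {next(indices)} {mount_point}/{name}" for name in dirs]


if __name__ == "__main__":
    # Example: three project directories spread over two MDTs.
    for command in plan_directories(["projA", "projB", "projC"], mdt_count=2):
        print(command)
```

Round-robin placement balances directory counts, not load; a real layout would normally account for the expected metadata activity of each directory.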

The function of the standard MDS node in the MMU or AMMU does not change when additional MMUs and AMMUs are added. Additional MDS nodes are added to the system via the procedures in this publication.

A system running one of the supported software releases supports up to eight (8) total MMUs. One MMU must be installed in the base rack. Up to seven (7) additional MMUs can be installed into the storage racks, with no more than one (1) MMU per storage rack. Each MMU is connected to the Local Management Network (LMN) and the Local Data Network (LDN), as illustrated in the following figure.
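
The placement limits above can also be expressed as a quick sanity check. The following is a minimal sketch under stated assumptions: each MMU is represented by the label of the rack that holds it, the label `"base"` stands for the base rack, and the function name `check_mmu_placement` is hypothetical. The limits themselves (eight total, one in the base rack, at most one per storage rack) come from the paragraph above.

```python
from collections import Counter

MAX_TOTAL_MMUS = 8  # one in the base rack plus up to seven in storage racks


def check_mmu_placement(racks):
    """Check a proposed MMU layout against the limits stated in the text.

    `racks` is a list with one rack label per MMU; "base" denotes the base rack
    (an assumed labeling convention). Returns a list of problem descriptions,
    which is empty when the layout satisfies every limit.
    """
    problems = []
    counts = Counter(racks)
    if len(racks) > MAX_TOTAL_MMUS:
        problems.append(f"{len(racks)} MMUs exceeds the limit of {MAX_TOTAL_MMUS}")
    if counts["base"] != 1:
        problems.append("exactly one MMU must be installed in the base rack")
    for rack, count in counts.items():
        if rack != "base" and count > 1:
            problems.append(f"storage rack {rack} holds {count} MMUs (limit is 1)")
    return problems
```

For example, `check_mmu_placement(["base", "rack2", "rack3"])` returns an empty list, while a layout that places two MMUs in the same storage rack returns one problem entry.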
