NetApp HCI User manual

NetApp HCI configuration and management documentation

HCI

NetApp

March 06, 2021

This PDF was generated from https://docs.netapp.com/us-en/hci/docs/index.html on March 06, 2021.

Always check docs.netapp.com for the latest.

Table of Contents

NetApp HCI configuration and management documentation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê1

Discover what’s new. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê1

Get started with NetApp HCI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê1

Release Notes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê2

What’s new in NetApp HCI. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê2

Additional release information . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê4

Concepts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê6

NetApp HCI product overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê6

User accounts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê7

Data protection. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê9

Clusters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê12

Nodes. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê15

Storage. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê16

NetApp HCI licensing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê19

NetApp Hybrid Cloud Control configuration maximums. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê19

NetApp HCI security. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê20

Performance and Quality of Service . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê22

Requirements and pre-deployment tasks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê25

Requirements for NetApp HCI deployment overview. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê25

Network port requirements. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê25

Network and switch requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê30

Network cable requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê31

IP address requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê32

Network configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê33

DNS and timekeeping requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê41

Environmental requirements . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê42

Protection domains . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê42

Witness Node resource requirements for two-node storage clusters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê42

Get started with NetApp HCI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê44

NetApp HCI installation and deployment overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê44

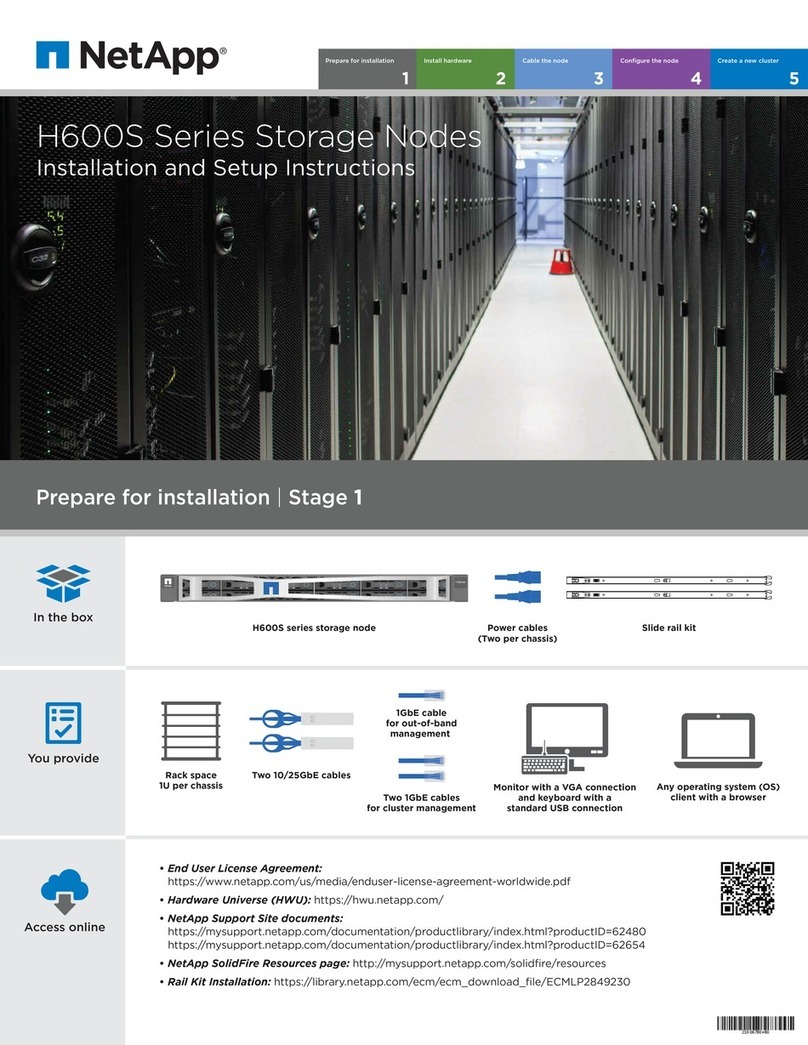

Install H-series hardware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê50

Configure LACP for optimal storage performance . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê64

Validate your environment with Active IQ Config Advisor. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê64

Configure IPMI for each node . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê67

Deploy NetApp HCI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê70

Access the NetApp Deployment Engine . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê70

Start your deployment . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê73

Configure VMware vSphere . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê73

Configuring NetApp HCI credentials . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê75

Select a network topology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê76

Inventory selection . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê77

Configure network settings. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê79

Review and deploy the configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê82

Post-deployment tasks. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê83

Manage NetApp HCI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê92

NetApp HCI management overview. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê92

Update vCenter and ESXi credentials . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê92

Manage NetApp HCI storage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê94

Work with the management node. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê114

Power your NetApp HCI system off or on . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê146

Monitor your NetApp HCI system with NetApp Hybrid Cloud Control . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê150

Monitor storage and compute resources on the Hybrid Cloud Control Dashboard . . . . . . . . . . . . . . . . . . . Ê150

View your inventory on the Nodes page . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê156

Edit Baseboard Management Controller connection information. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê158

Monitor volumes on your storage cluster . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê161

Monitor performance, capacity, and cluster health with SolidFire Active IQ. . . . . . . . . . . . . . . . . . . . . . . . . Ê163

Collect logs for troubleshooting . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê164

Upgrade your NetApp HCI or SolidFire system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê168

Upgrades overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê168

Upgrade sequences . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê168

System upgrade procedures . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê171

vSphere upgrade sequences with vCenter Plug-in . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê252

Expand your NetApp HCI system. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê254

Expansion overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê254

Expand NetApp HCI storage resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê254

Expand NetApp HCI compute resources . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê256

Expand NetApp HCI storage and compute resources at the same time . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê259

Remove Witness Nodes after expanding cluster . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê262

Use Rancher on NetApp HCI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê264

Rancher on NetApp HCI overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê264

Rancher on NetApp HCI concepts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê266

Requirements for Rancher on NetApp HCI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê267

Deploy Rancher on NetApp HCI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê269

Post deployment tasks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê274

Deploy user clusters and applications . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê279

Manage Rancher on NetApp HCI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê280

Monitor a Rancher on NetApp HCI implementation. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê280

Upgrade Rancher on NetApp HCI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê282

Remove a Rancher installation on NetApp HCI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê288

Maintain H-series hardware . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê290

H-series hardware maintenance overview . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê290

Replace 2U H-series chassis . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê290

Replace DC power supply units in H615C and H610S nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê297

Replace DIMMs in compute nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê299

Replace drives for storage nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê308

Replace H410C nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê313

Replace H410S nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê332

Replace H610C and H615C nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê339

Replace H610S nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê345

Replace power supply units . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê347

Replace SN2010, SN2100, and SN2700 switches . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê349

Replace storage node in a two-node cluster . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê357

Legal notices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê358

Copyright . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê358

Trademarks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê358

Patents . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê358

Privacy policy . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê358

Open source. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . Ê358

NetApp HCI configuration and management documentation

NetApp HCI provides both storage and compute resources, combining them to build a VMware vSphere environment backed by the capabilities of NetApp Element software.

You can upgrade, expand, and monitor your system with the NetApp Hybrid Cloud Control interface and manage NetApp HCI resources with the NetApp Element Plug-in for vCenter Server.

Discover what’s new

•What’s new in NetApp HCI

•What’s new in NetApp Element software

•What’s new in management services for Element software and NetApp HCI

•What’s new in NetApp Element Plug-in for vCenter Server

Get started with NetApp HCI

•NetApp HCI installation and deployment overview

•Review basic concepts

Find more information

•NetApp Element Plug-in for vCenter Server

•NetApp HCI Resources page

Release Notes

What’s new in NetApp HCI

NetApp periodically updates NetApp HCI to bring you new features, enhancements, and bug fixes. NetApp HCI 1.8P1 includes Element 12.2 for storage clusters.

•The NetApp HCI 1.8P1 section describes new features and updates in NetApp HCI version 1.8P1.

•The Element 12.2 section describes new features and updates in NetApp Element 12.2.

NetApp HCI 1.8P1

NetApp HCI 1.8P1 includes security and stability improvements.

NetApp HCI documentation enhancements

You can now access NetApp HCI upgrade, expansion, monitoring, and concepts information in an easy-to-navigate format.

NetApp Element Plug-in for vCenter Server 4.5 availability

The NetApp Element Plug-in for vCenter Server 4.5 is available outside of the management node 12.2 and NetApp HCI 1.8P1 releases. To upgrade the plug-in, follow the instructions in the NetApp HCI Upgrades documentation.

NetApp Hybrid Cloud Control enhancements

NetApp Hybrid Cloud Control is enhanced for version 1.8P1.

Element 12.2

NetApp HCI 1.8P1 includes Element 12.2 for storage clusters. Element 12.2 introduces SolidFire Enterprise SDS, software encryption at rest, maintenance mode, enhanced volume access security, Fully Qualified Domain Name (FQDN) access to UIs, storage node firmware updates, and security updates.

SolidFire Enterprise SDS

Element 12.2 introduces SolidFire Enterprise SDS (eSDS). SolidFire eSDS provides the benefits of SolidFire scale-out technology and NetApp Element software data services on the hardware of your choice that meets the reference configuration for SolidFire eSDS.

The following Element API methods are new for SolidFire eSDS (see the Element 12.2 API information for SolidFire eSDS for details):

•GetLicenseKey

•SetLicenseKey
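Like other Element API methods, these are invoked as JSON-RPC requests posted to the cluster's management virtual IP (MVIP) over HTTPS. The following minimal Python sketch only builds such a request and sends nothing. The helper name `build_element_request`, the example MVIP, and the assumption that `GetLicenseKey` takes no parameters are illustrative; confirm them against the Element 12.2 API information for SolidFire eSDS.

```python
import json
from typing import Any, Dict, Optional, Tuple


def build_element_request(
    mvip: str,
    method: str,
    params: Optional[Dict[str, Any]] = None,
    api_version: str = "12.2",
    request_id: int = 1,
) -> Tuple[str, str]:
    """Return the endpoint URL and JSON-RPC body for an Element API call.

    Nothing is sent; pass the result to an HTTPS client and authenticate
    with cluster administrator credentials.
    """
    # Element API endpoints are versioned: https://<MVIP>/json-rpc/<version>
    url = f"https://{mvip}/json-rpc/{api_version}"
    body = json.dumps({"method": method, "params": params or {}, "id": request_id})
    return url, body


# Hypothetical MVIP of a SolidFire eSDS cluster.
url, body = build_element_request("10.0.0.10", "GetLicenseKey")
print(url)
print(body)
```

Keeping request construction separate from transport makes the payload easy to inspect or log before it is sent with whatever HTTP library you already use.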

Software encryption at rest

Element 12.2 introduces software encryption at rest, which you can enable when you create a storage cluster; it is enabled by default when you create a SolidFire Enterprise SDS storage cluster. This feature encrypts all data stored on the SSDs in the storage nodes and has only a small (about 2%) performance impact on client I/O.

The following Element API method relates to software encryption at rest (see the Element API Reference Guide for details):

•CreateCluster
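Because the choice is made when the cluster is created, it appears as a `CreateCluster` parameter. The Python sketch below builds a `CreateCluster` JSON-RPC body without sending it. The parameter name `enableSoftwareEncryptionAtRest` reflects our understanding of the Element 12.2 API and should be verified in the Element API Reference Guide; the helper name, addresses, and credentials are hypothetical.

```python
import json
from typing import Any, Dict, List


def create_cluster_request(
    mvip: str,
    svip: str,
    username: str,
    password: str,
    node_ips: List[str],
    encrypt_at_rest: bool,
) -> Dict[str, Any]:
    """Build a CreateCluster JSON-RPC body (sketch; verify parameter names)."""
    return {
        "method": "CreateCluster",
        "params": {
            "acceptEula": True,
            "mvip": mvip,
            "svip": svip,
            "username": username,
            "password": password,
            # Storage IPs of the nodes that will form the cluster.
            "nodes": node_ips,
            # Assumed Element 12.2 parameter; chosen only at cluster creation.
            "enableSoftwareEncryptionAtRest": encrypt_at_rest,
        },
        "id": 1,
    }


request = create_cluster_request(
    "10.0.0.10", "10.0.1.10", "admin", "example-password",
    ["10.0.1.21", "10.0.1.22", "10.0.1.23", "10.0.1.24"],
    encrypt_at_rest=True,
)
print(json.dumps(request, indent=2))
```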

Maintenance mode

Element 12.2 introduces maintenance mode, which enables you to take a storage node offline for maintenance, such as software upgrades or host repairs, while preventing a full sync of all data. If one or more nodes need maintenance, you can minimize the I/O impact on the rest of the storage cluster by enabling maintenance mode for those nodes before you begin. You can use maintenance mode with both appliance nodes and SolidFire eSDS nodes.

Enhanced volume access security

You can now restrict volume access to certain initiators based on VLAN (virtual network) association. You can associate new or existing initiators with one or more virtual networks, restricting each initiator to iSCSI targets that are accessible through those virtual networks.

The following Element API methods are updated for these security improvements (see the Element API Reference Guide for details):

•CreateInitiators

•ModifyInitiators

•AddAccount

•ModifyAccount
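Conceptually, the restriction is a set-membership test: an initiator associated with virtual networks can reach only iSCSI targets exposed on one of those networks. The Python sketch below models that rule and does not call the Element API. The `Initiator` class and `can_reach_target` function are hypothetical, and treating an initiator with no virtual network associations as unrestricted is an assumption of this sketch.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class Initiator:
    """Hypothetical model of an iSCSI initiator and its VLAN associations."""
    iqn: str
    # IDs of the virtual networks (VLANs) the initiator is associated with.
    virtual_network_ids: Set[int] = field(default_factory=set)


def can_reach_target(initiator: Initiator, target_virtual_network_id: int) -> bool:
    """Return True if the initiator may use a target on the given virtual network."""
    if not initiator.virtual_network_ids:
        # Assumption: no associations means the initiator is not restricted.
        return True
    return target_virtual_network_id in initiator.virtual_network_ids


host = Initiator("iqn.1998-01.com.vmware:esxi-01", {101, 102})
print(can_reach_target(host, 101), can_reach_target(host, 200))
```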

Fully Qualified Domain Name (FQDN) access to UIs

Element 12.2 supports cluster web interface access using FQDNs. On Element 12.2 storage clusters, if you use the FQDN to access web user interfaces such as the Element web UI, per-node UI, or management node UI, you must first add a storage cluster setting to identify the FQDN used by the cluster. This setting enables the cluster to properly redirect a login session and improves integration with external services, such as key managers and identity providers for multi-factor authentication. This feature requires management services version 2.15 or later.

Storage node firmware updates

Element 12.2 includes firmware updates for storage nodes.

Security enhancements

Element 12.2 resolves security vulnerabilities for storage nodes and the management node. Learn more about these security enhancements.

New SMART warning for failing drives

Element 12.2 now performs periodic health checks on SolidFire appliance drives using SMART health data from the drives. A drive that fails the SMART health check might be close to failure. If a drive fails the SMART health check, a new critical-severity cluster fault appears: "Drive with serial: <serial number> in slot: <node slot><drive slot> has failed the SMART overall health check. To resolve this fault, replace the drive."

Find more information

•NetApp Hybrid Cloud Control and Management Services Release Notes

•NetApp Element Plug-in for vCenter Server

•NetApp HCI Resources page

•SolidFire and Element Software Documentation Center

•Firmware and driver versions for NetApp HCI and NetApp Element software

Additional release information

You can find links to the latest and earlier release notes for various components of the NetApp HCI and Element storage environment.

You will be prompted to log in using your NetApp Support Site credentials.

NetApp HCI

•NetApp HCI 1.8P1 Release Notes

•NetApp HCI 1.8 Release Notes

•NetApp HCI 1.7P1 Release Notes

NetApp Element software

•NetApp Element Software 12.2 Release Notes

•NetApp Element Software 12.0 Release Notes

•NetApp Element Software 11.8 Release Notes

•NetApp Element Software 11.7 Release Notes

•NetApp Element Software 11.5.1 Release Notes

•NetApp Element Software 11.3P1 Release Notes

Management services

•Management Services Release Notes

NetApp Element Plug-in for vCenter Server

•vCenter Plug-in 4.6 Release Notes

•vCenter Plug-in 4.5 Release Notes

•vCenter Plug-in 4.4 Release Notes

•vCenter Plug-in 4.3 Release Notes

Compute firmware

•Compute Firmware Bundle 2.76 Release Notes (latest)

•Compute Firmware Bundle 2.27 Release Notes

•Compute Firmware Bundle 12.2.109 Release Notes

Storage firmware

•Storage Firmware Bundle 2.76 Release Notes (latest)

•Storage Firmware Bundle 2.27 Release Notes

•H610S BMC 3.84.07 Release Notes

Concepts

NetApp HCI product overview

NetApp HCI is an enterprise-scale hybrid cloud infrastructure design that combines storage, compute, networking, and hypervisor, and adds capabilities that span public and private clouds.

NetApp’s disaggregated hybrid cloud infrastructure allows independent scaling of compute and storage, adapting to workloads with guaranteed performance.

•Meets hybrid multicloud demand

•Scales compute and storage independently

•Simplifies data services orchestration across hybrid multiclouds

Components of NetApp HCI

Here is an overview of the various components of the NetApp HCI environment:

•NetApp HCI provides both storage and compute resources. You use the NetApp Deployment Engine wizard to deploy NetApp HCI. After successful deployment, compute nodes appear as ESXi hosts and you can manage them in the VMware vSphere Web Client.

•Management services or microservices include the Active IQ collector, QoSSIOC for the vCenter Plug-in, and the mNode service; they are updated frequently as service bundles. As of the Element 11.3 release, management services are hosted on the management node, allowing for quicker updates of select software services outside of major releases. The management node (mNode) is a virtual machine that runs in parallel with one or more Element software-based storage clusters. It is used to provide and upgrade system services, including monitoring and telemetry; to manage cluster assets and settings; to run system tests and utilities; and to enable NetApp Support access for troubleshooting.

Learn more about management services releases.

•NetApp Hybrid Cloud Control enables you to manage NetApp HCI. You can upgrade management services, expand your system, collect logs, and monitor your installation by using NetApp SolidFire Active IQ. You log in to NetApp Hybrid Cloud Control by browsing to the IP address of the management node.

•The NetApp Element Plug-in for vCenter Server (VCP) is a web-based tool integrated with the vSphere user interface (UI). The plug-in is a scalable, user-friendly extension to VMware vSphere that can manage and monitor storage clusters running NetApp Element software, and it provides an alternative to the Element UI. You can use the plug-in UI to discover and configure clusters, and to manage, monitor, and allocate storage from cluster capacity to configure datastores and virtual datastores (for virtual volumes). A cluster appears on the network as a single local group that is represented to hosts and administrators by virtual IP addresses. You can also monitor cluster activity with real-time reporting, including error and alert messages for any event that might occur during various operations.

Learn more about VCP.

•By default, NetApp HCI sends performance and alert statistics to the NetApp SolidFire Active IQ service. As part of your normal support contract, NetApp Support monitors this data and alerts you to any performance bottlenecks or potential system issues. You need to create a NetApp Support account if you do not already have one (even if you have an existing SolidFire Active IQ account) so that you can take advantage of this service.

Learn more about NetApp SolidFire Active IQ.

NetApp HCI URLs

Here are the common URLs you use with NetApp HCI:

| URL | Description |
|---|---|
| https://[IPv4 address of Bond1G interface on a storage node] | Access the NetApp Deployment Engine wizard to install and configure NetApp HCI. |
| https://[management node IP address] | Access NetApp Hybrid Cloud Control to upgrade, expand, and monitor your NetApp HCI installation, and to update management services. |
| https://[IP address]:442 | From the per-node UI, access network and cluster settings and use system tests and utilities. |
| https://[management node IP address]:9443 | Register the vCenter Plug-in package in the vSphere Web Client. |
| https://activeiq.solidfire.com | Monitor data and receive alerts about any performance bottlenecks or potential system issues. |
| https://[management node IP address]/mnode | Manually update management services using the REST API UI from the management node. |
| https://[storage cluster MVIP address] | Access the NetApp Element software UI. |
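If you script against several of these endpoints, it can help to derive them from the addresses you record at deployment. The following Python sketch does that for IPv4 addresses; the function name, dictionary keys, and example addresses are hypothetical, while the ports (442 and 9443) and the `/mnode` path come from the URL list above. It assumes the per-node UI is reached on the same storage node address used for the Deployment Engine.

```python
from typing import Dict


def hci_urls(storage_node_ip: str, mnode_ip: str, mvip: str) -> Dict[str, str]:
    """Map each NetApp HCI web entry point to its URL (IPv4 addresses assumed)."""
    return {
        # Bond1G address of a storage node, used by the Deployment Engine.
        "deployment_engine": f"https://{storage_node_ip}",
        # Per-node UI on port 442 (assumed: same storage node address).
        "per_node_ui": f"https://{storage_node_ip}:442",
        "hybrid_cloud_control": f"https://{mnode_ip}",
        "vcenter_plugin_registration": f"https://{mnode_ip}:9443",
        "mnode_rest_api": f"https://{mnode_ip}/mnode",
        "element_ui": f"https://{mvip}",
        "active_iq": "https://activeiq.solidfire.com",
    }


for name, link in hci_urls("192.168.1.20", "192.168.1.30", "192.168.1.40").items():
    print(f"{name}: {link}")
```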

Find more information

•NetApp Element Plug-in for vCenter Server

•NetApp HCI Resources page

User accounts

To access storage resources on your system, you’ll need to set up user accounts.

User account management

User accounts are used to control access to the storage resources on a NetApp Element software-based network. At least one user account is required before a volume can be created.

When you create a volume, it is assigned to an account. If you have created a virtual volume, the account is the storage container.

Here are some additional considerations:

•The account contains the CHAP authentication required to access the volumes assigned to it.

•An account can have up to 2000 volumes assigned to it, but a volume can belong to only one account.

•User accounts can be managed from the NetApp Element Management extension point.
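The two ownership rules above (at most 2000 volumes per account, exactly one account per volume) can be expressed as a small bookkeeping class. This Python sketch is a hypothetical model for illustration only; it is not how Element stores accounts, and the class and method names are invented.

```python
from typing import Dict

# Limit stated in the considerations above.
MAX_VOLUMES_PER_ACCOUNT = 2000


class VolumeAssignments:
    """Hypothetical bookkeeping for volume-to-account ownership."""

    def __init__(self) -> None:
        self.owner: Dict[str, str] = {}  # volume name -> owning account
        self.count: Dict[str, int] = {}  # account name -> number of volumes

    def assign(self, volume: str, account: str) -> None:
        # A volume can belong to only one account.
        if volume in self.owner:
            raise ValueError(f"{volume} already belongs to {self.owner[volume]}")
        # An account can have at most 2000 volumes assigned to it.
        if self.count.get(account, 0) >= MAX_VOLUMES_PER_ACCOUNT:
            raise ValueError(f"{account} already has {MAX_VOLUMES_PER_ACCOUNT} volumes")
        self.owner[volume] = account
        self.count[account] = self.count.get(account, 0) + 1


assignments = VolumeAssignments()
assignments.assign("sql-data", "tenant-a")
```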

Using NetApp Hybrid Cloud Control, you can create and manage the following types of accounts:

•Administrator user accounts for the storage cluster

•Authoritative user accounts

•Volume accounts, specific only to the storage cluster on which they were created.

Storage cluster administrator accounts

There are two types of administrator accounts that can exist in a storage cluster running NetApp Element software:

•Primary cluster administrator account: This administrator account is created when the cluster is created. This account is the primary administrative account with the highest level of access to the cluster. This account is analogous to a root user in a Linux system. You can change the password for this administrator account.

•Cluster administrator account: You can give a cluster administrator account a limited range of administrative access to perform specific tasks within a cluster. The credentials assigned to each cluster administrator account are used to authenticate API and Element UI requests within the storage system.

A local (non-LDAP) cluster administrator account is required to access active nodes in a cluster via the per-node UI. Account credentials are not required to access a node that is not yet part of a cluster.

You can manage cluster administrator accounts by creating, deleting, and editing cluster administrator accounts, changing the cluster administrator password, and configuring LDAP settings to manage system access for users.

For details, see the SolidFire and Element Documentation Center.

Authoritative user accounts

Authoritative user accounts can authenticate against any storage asset (nodes and clusters) associated with the NetApp Hybrid Cloud Control instance. With this account, you can manage volumes, accounts, access groups, and more across all clusters.

Authoritative user accounts are managed from the User Management option in the top-right menu of NetApp Hybrid Cloud Control.

The authoritative storage cluster is the storage cluster that NetApp Hybrid Cloud Control uses to authenticate users.

All users created on the authoritative storage cluster can log in to NetApp Hybrid Cloud Control. Users created on other storage clusters cannot log in to Hybrid Cloud Control.

•If your management node only has one storage cluster, then it is the authoritative cluster.

•If your management node has two or more storage clusters, one of those clusters is assigned as the authoritative cluster and only users from that cluster can log in to NetApp Hybrid Cloud Control.

While many NetApp Hybrid Cloud Control features work with multiple storage clusters, authentication and authorization have necessary limitations. Specifically, users from the authoritative cluster can run actions on other clusters tied to NetApp Hybrid Cloud Control even if they are not users on those other storage clusters. Before you manage multiple storage clusters, ensure that users defined on the authoritative cluster are defined on all other storage clusters with the same permissions. You can manage users from NetApp Hybrid Cloud Control.
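A quick consistency check before adding clusters can catch users who are missing or have different permissions outside the authoritative cluster. The Python sketch below compares user permissions across clusters. It assumes you have already exported each cluster's users, by whatever means you prefer, into the hypothetical `users_by_cluster` dictionary; the cluster names, user names, and permission names are placeholders.

```python
from typing import Dict, List, Set

# Hypothetical shape: cluster name -> {username: set of permission names}
UsersByCluster = Dict[str, Dict[str, Set[str]]]


def find_permission_gaps(users_by_cluster: UsersByCluster, authoritative: str) -> List[str]:
    """List authoritative-cluster users that are missing or differ elsewhere."""
    gaps: List[str] = []
    for user, perms in users_by_cluster[authoritative].items():
        for cluster, users in users_by_cluster.items():
            if cluster == authoritative:
                continue
            other = users.get(user)
            if other is None:
                gaps.append(f"{user}: missing on {cluster}")
            elif other != perms:
                gaps.append(f"{user}: permissions differ on {cluster}")
    return gaps


users_by_cluster = {
    "cluster-a": {"admin": {"volumes", "accounts"}, "ops": {"reporting"}},
    "cluster-b": {"admin": {"volumes", "accounts"}},
    "cluster-c": {"admin": {"volumes"}, "ops": {"reporting"}},
}
for gap in find_permission_gaps(users_by_cluster, "cluster-a"):
    print(gap)
```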

Volume accounts

Volume-specific accounts are specific only to the storage cluster on which they were created. These accounts enable you to set permissions on specific volumes across the network, but have no effect outside of those volumes.

Volume accounts are managed within the NetApp Hybrid Cloud Control Volumes table.

Find more information

•Manage user accounts

•Learn about clusters

•NetApp HCI Resources page

•NetApp Element Plug-in for vCenter Server

•SolidFire and Element Documentation Center

Data protection

NetApp HCI data protection terms include different types of remote replication, volume snapshots, volume cloning, protection domains, and high availability with Double Helix technology.

NetApp HCI data protection includes the following concepts:

•Remote replication types

•Volume snapshots for data protection

•Volume clones

•Backup and restore process overview for SolidFire storage

•Protection domains

•Double Helix high availability

Remote replication types

Remote replication of data can take the following forms:

•Synchronous and asynchronous replication between clusters

•Snapshot-only replication

•Replication between Element and ONTAP clusters using SnapMirror

See TR-4741: NetApp Element Software Remote Replication.

Synchronous and asynchronous replication between clusters

For clusters running NetApp Element software, real-time replication enables the quick creation of remote copies of volume data.

You can pair a storage cluster with up to four other storage clusters. You can replicate volume data synchronously or asynchronously from either cluster in a cluster pair for failover and failback scenarios.
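The pairing rule above can be expressed as simple bookkeeping. The following is a minimal Python sketch, assuming a hypothetical `ClusterPairs` class. It is not part of the Element API. It only shows that each cluster may pair with at most four others and that a pair is symmetric, so either cluster can act as the replication source.

```python
# Hypothetical bookkeeping model of Element cluster pairing (not an Element API).
MAX_PAIRED_CLUSTERS = 4  # a cluster can pair with up to four other clusters


class ClusterPairs:
    def __init__(self) -> None:
        # cluster name -> set of cluster names it is paired with
        self._peers = {}

    def pair(self, a: str, b: str) -> None:
        """Pair two clusters symmetrically, enforcing the per-cluster limit."""
        if a == b:
            raise ValueError("a cluster cannot be paired with itself")
        peers_a = self._peers.setdefault(a, set())
        peers_b = self._peers.setdefault(b, set())
        if b in peers_a:
            return  # already paired; nothing to do
        for name, peers in ((a, peers_a), (b, peers_b)):
            if len(peers) >= MAX_PAIRED_CLUSTERS:
                raise ValueError(f"{name} is already paired with {MAX_PAIRED_CLUSTERS} clusters")
        peers_a.add(b)
        peers_b.add(a)

    def can_replicate(self, source: str, target: str) -> bool:
        """Either cluster in a pair may act as the source (failover and failback)."""
        return target in self._peers.get(source, set())
```

Pairing cluster "A" with four clusters succeeds. A fifth `pair("A", ...)` call raises `ValueError`.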

Synchronous replication

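Synchronous replication continuously replicates data from the source cluster to the target cluster. Because a write is acknowledged to the client only after it is committed on both the source and target clusters, synchronous replication is affected by latency, packet loss, jitter, and bandwidth.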

Synchronous replication is appropriate for the following situations:

•Replication of several systems over a short distance

•A disaster recovery site that is geographically local to the source

•Time-sensitive applications and the protection of databases

•Business continuity applications that require the secondary site to act as the primary site when the primary site is down

Asynchronous replication

Asynchronous replication continuously replicates data from a source cluster to a target cluster without waiting for acknowledgments from the target cluster. During asynchronous replication, writes are acknowledged to the client (application) after they are committed on the source cluster.

Asynchronous replication is appropriate for the following situations:

•The disaster recovery site is far from the source and the application does not tolerate latencies induced by the network.

•There are bandwidth limitations on the network connecting the source and target clusters.
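The practical difference between the two modes is when the client receives its acknowledgment. The following in-memory Python sketch illustrates only that ordering. The `Cluster` and `ReplicatedVolume` classes are hypothetical. They do not model networking, Element internals, or failure handling.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Cluster:
    name: str
    blocks: dict = field(default_factory=dict)  # logical block address -> data

    def commit(self, lba: int, data: bytes) -> None:
        self.blocks[lba] = data


@dataclass
class ReplicatedVolume:
    source: Cluster
    target: Cluster
    mode: str  # "sync" or "async"
    pending: deque = field(default_factory=deque)  # async writes not yet shipped

    def write(self, lba: int, data: bytes) -> str:
        if self.mode not in ("sync", "async"):
            raise ValueError(f"unknown mode: {self.mode}")
        self.source.commit(lba, data)
        if self.mode == "sync":
            # Sync: the target commits before the client is acknowledged, so the
            # round trip to the target cluster is on every write path.
            self.target.commit(lba, data)
        else:
            # Async: acknowledge once the source has committed; ship the write later.
            self.pending.append((lba, data))
        return "ack"

    def drain(self) -> None:
        """Background step that ships queued asynchronous writes to the target."""
        while self.pending:
            lba, data = self.pending.popleft()
            self.target.commit(lba, data)
```

In "async" mode the target lags the source until `drain()` runs. That lag is why asynchronous replication tolerates distance and limited bandwidth better than synchronous replication.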

Snapshot-only replication

Snapshot-only data protection replicates changed data at specific points in time to a remote cluster. Only the snapshots created on the source cluster are replicated; active writes from the source volume are not. You can set the frequency of the snapshot replications.

Snapshot replication does not affect asynchronous or synchronous replication.
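Replicating "changed data at specific points in time" amounts to shipping the difference between consecutive snapshots. The following Python sketch models a snapshot as a plain mapping of block address to contents. This is an assumption made for illustration, not Element's on-disk format. `snapshot_delta` and `replicate_snapshot` are hypothetical helpers. Writes made after the latest snapshot are not included, which matches the rule that active writes are not replicated.

```python
from typing import Dict, Optional

BlockMap = Dict[int, bytes]  # logical block address -> block contents


def snapshot_delta(prev: BlockMap, curr: BlockMap) -> Dict[int, Optional[bytes]]:
    """Blocks that changed between two snapshots; None marks a block absent from `curr`."""
    delta: Dict[int, Optional[bytes]] = {}
    for lba, data in curr.items():
        if prev.get(lba) != data:
            delta[lba] = data
    for lba in prev.keys() - curr.keys():
        delta[lba] = None
    return delta


def replicate_snapshot(remote: BlockMap, prev: BlockMap, curr: BlockMap) -> None:
    """Apply only the snapshot-to-snapshot changes to the remote copy."""
    for lba, data in snapshot_delta(prev, curr).items():
        if data is None:
            remote.pop(lba, None)
        else:
            remote[lba] = data
```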

Replication between Element and ONTAP clusters using SnapMirror

With NetApp SnapMirror technology, you can replicate snapshots that were taken using NetApp Element software to ONTAP for disaster recovery purposes. In a SnapMirror relationship, Element is one endpoint and ONTAP is the other.

SnapMirror is a NetApp Snapshot™ replication technology that facilitates disaster recovery, designed for failover from primary storage to secondary storage at a geographically remote site. SnapMirror technology creates a replica, or mirror, of the working data in secondary storage from which you can continue to serve data if an outage occurs at the primary site. Data is mirrored at the volume level.

The relationship between the source volume in primary storage and the destination volume in secondary storage is called a data protection relationship. The clusters in which the volumes reside are referred to as endpoints. These clusters, and the volumes that contain the replicated data, must be peered. A peer relationship enables clusters and volumes to exchange data securely.

SnapMirror runs natively on the NetApp ONTAP controllers and is integrated into Element, which runs on NetApp HCI and SolidFire clusters. The logic to control SnapMirror resides in ONTAP software; therefore, all SnapMirror relationships must involve at least one ONTAP system to perform the coordination work. Users manage relationships between Element and ONTAP clusters primarily through the Element UI; however, some management tasks reside in NetApp ONTAP System Manager. Users can also manage SnapMirror through the CLI and API, which are both available in ONTAP and Element.

See TR-4651: NetApp SolidFire SnapMirror Architecture and Configuration (login required).

You must manually enable SnapMirror functionality at the cluster level by using Element software. SnapMirror functionality is disabled by default, and it is not automatically enabled as part of a new installation or upgrade. After enabling SnapMirror, you can create SnapMirror relationships from the Data Protection tab in the Element software.
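Besides the Element UI, the cluster-level switch can be driven through the Element JSON-RPC API. The following Python sketch only builds the request and does not send it. It assumes an Element API method named `EnableFeature` that takes a `feature` parameter with the value `"SnapMirror"`. It also assumes an endpoint of the form `https://<MVIP>/json-rpc/<version>`. Treat the method name, the feature string, and the API version `"12.2"` as assumptions to confirm against the Element API reference for your release.

```python
import json
from typing import Tuple


def build_enable_snapmirror_request(mvip: str, api_version: str = "12.2") -> Tuple[str, str]:
    """Return (url, JSON body) for an Element JSON-RPC call that enables SnapMirror.

    Assumption: method "EnableFeature" with params {"feature": "SnapMirror"};
    verify both against the Element API reference before use.
    """
    url = f"https://{mvip}/json-rpc/{api_version}"
    body = json.dumps({
        "method": "EnableFeature",
        "params": {"feature": "SnapMirror"},
        "id": 1,
    })
    return url, body
```

To keep the sketch free of network dependencies, it omits the step of sending the body as an HTTPS POST with cluster administrator credentials.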

Volume snapshots for data protection

A volume snapshot is a point-in-time copy of a volume that you can later use to restore the volume to that specific time.

While snapshots are similar to volume clones, snapshots are simply replicas of volume metadata, so you cannot mount or write to them. Creating a volume snapshot also takes only a small amount of system resources and space, which makes snapshot creation faster than cloning.

You can replicate snapshots to a remote cluster and use them as a backup copy of the volume. This enables you to roll back a volume to a specific point in time by using the replicated snapshot; you can also create a clone of a volume from a replicated snapshot.

You can back up snapshots from a SolidFire cluster to an external object store, or to another SolidFire cluster. When you back up a snapshot to an external object store, you must have a connection to the object store that allows read/write operations.

You can take snapshots of an individual volume or of multiple volumes for data protection.
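Snapshots are cheap because they copy metadata rather than data. The following toy Python model makes that concrete. It assumes that a volume is a map from logical block address to a stored block ID and that new writes land in new blocks. The `Volume` class is hypothetical and does not describe Element's internal structures.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Volume:
    # Metadata: logical block address -> id of a stored data block
    block_map: Dict[int, str] = field(default_factory=dict)
    snapshots: Dict[str, Dict[int, str]] = field(default_factory=dict)

    def write(self, lba: int, block_id: str) -> None:
        # New data goes to a new block; only this volume's map entry changes,
        # so block ids referenced by earlier snapshots stay untouched.
        self.block_map[lba] = block_id

    def snapshot(self, name: str) -> None:
        # Copy only the map (metadata), never the data blocks it points to.
        self.snapshots[name] = dict(self.block_map)

    def rollback(self, name: str) -> None:
        # Restore the volume to the point in time captured by the snapshot.
        self.block_map = dict(self.snapshots[name])
```

Taking a snapshot costs one map copy, independent of how much data the blocks hold. A clone, by contrast, must copy the data referenced by the snapshot.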

Volume clones

A clone of a single volume or multiple volumes is a point-in-time copy of the data. When you clone a volume, the system creates a snapshot of the volume and then creates a copy of the data referenced by the snapshot. This is an asynchronous process, and the amount of time the process requires depends on the size of the volume you are cloning and the current cluster load.

The cluster supports up to two running clone requests per volume at a time and up to eight active volume clone operations at a time. Requests beyond these limits are queued for later processing.
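The two concurrency limits interact: a request can wait either because its volume already has two running clones or because the cluster already has eight. The following Python sketch models that admission logic with a hypothetical `CloneScheduler`. The first-in, first-out retry order used when a clone finishes is an assumption, not documented Element behavior.

```python
from collections import deque
from typing import Deque, Dict, Tuple

MAX_CLONES_PER_VOLUME = 2  # running clone requests per volume
MAX_ACTIVE_CLONES = 8      # active clone operations per cluster


class CloneScheduler:
    def __init__(self) -> None:
        self.active: Dict[int, int] = {}  # volume id -> running clone count
        self.active_total = 0
        self.queue: Deque[Tuple[int, str]] = deque()  # (volume id, clone name)

    def _can_start(self, volume_id: int) -> bool:
        return (self.active_total < MAX_ACTIVE_CLONES
                and self.active.get(volume_id, 0) < MAX_CLONES_PER_VOLUME)

    def _start(self, volume_id: int) -> None:
        self.active[volume_id] = self.active.get(volume_id, 0) + 1
        self.active_total += 1

    def request(self, volume_id: int, clone_name: str) -> str:
        if self._can_start(volume_id):
            self._start(volume_id)
            return "running"
        self.queue.append((volume_id, clone_name))
        return "queued"

    def finish(self, volume_id: int) -> None:
        self.active[volume_id] -= 1
        self.active_total -= 1
        # Walk the queue once, starting requests that now fit within both limits
        # and re-appending the rest in their original order.
        for _ in range(len(self.queue)):
            vid, name = self.queue.popleft()
            if self._can_start(vid):
                self._start(vid)
            else:
                self.queue.append((vid, name))
```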

Backup and restore process overview for SolidFire storage

You can back up and restore volumes to other SolidFire storage, as well as to secondary object stores that are compatible with Amazon S3 or OpenStack Swift.

You can back up a volume to the following:

•A SolidFire storage cluster

•An Amazon S3 object store

•An OpenStack Swift object store

When you restore volumes from OpenStack Swift or Amazon S3, you need manifest information from the original backup process. If you are restoring a volume that was backed up on a SolidFire storage system, no manifest information is required.

Protection domains

A protection domain is a node or a set of nodes grouped together such that any part or even all of it might fail, while maintaining data availability. Protection domains enable a storage cluster to heal automatically from the loss of a chassis (chassis affinity) or an entire domain (group of chassis).

A protection domain layout assigns each node to a specific protection domain.

Two different protection domain layouts, called protection domain levels, are supported.

•At the node level, each node is in its own protection domain.

•At the chassis level, only nodes that share a chassis are in the same protection domain.

◦The chassis level layout is automatically determined from the hardware when the node is added to the cluster.

◦In a cluster where each node is in a separate chassis, these two levels are functionally identical.
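The two protection domain levels are two ways of partitioning the same set of nodes. The following Python sketch assumes chassis IDs are already known for each node, as they would be after hardware detection. `protection_domains` is a hypothetical helper, not a NetApp API. It shows the node-level and chassis-level groupings, and it shows that they coincide when every node sits in its own chassis.

```python
from collections import defaultdict
from typing import Dict, List


def protection_domains(node_chassis: Dict[str, str], level: str) -> Dict[str, List[str]]:
    """Group nodes into protection domains.

    node_chassis: node name -> chassis id, as detected from the hardware.
    level: "node" (each node is its own domain) or
           "chassis" (nodes that share a chassis share a domain).
    """
    if level not in ("node", "chassis"):
        raise ValueError("level must be 'node' or 'chassis'")
    domains: Dict[str, List[str]] = defaultdict(list)
    for node, chassis in sorted(node_chassis.items()):
        domains[node if level == "node" else chassis].append(node)
    return dict(domains)
```

For example, with `{"n1": "chA", "n2": "chA", "n3": "chB"}` the chassis level yields two domains, `chA` with n1 and n2 and `chB` with n3. The node level yields three single-node domains.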

You can manually enable protection domain monitoring using the NetApp Element Configuration extension point in the NetApp Element Plug-in for vCenter Server. You can select a protection domain threshold based on node or chassis domains.

When creating a new cluster, if you are using storage nodes that reside in a shared chassis, you might want to consider designing for chassis-level failure protection using the protection domains feature.

You can define a custom protection domain layout, where each node is associated with one and only one custom protection domain. By default, each node is assigned to the same default custom protection domain.

See SolidFire and Element 12.2 Documentation Center.

Double Helix high availability

Double Helix data protection is a replication method that spreads at least two redundant copies of data across all drives within a system. The “RAID-less” approach enables a system to absorb multiple, concurrent failures across all levels of the storage system and repair quickly.
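The core invariant behind two redundant copies is that the copies of a block never share a failure domain. The following Python sketch enforces that invariant at node granularity. It simplifies the description above, which spreads copies across drives. The hash-based rotation in the hypothetical `place_block` function is an illustrative stand-in, not Element's actual placement algorithm.

```python
import hashlib
from typing import Dict, Tuple


def place_block(block_id: str, node_domain: Dict[str, str]) -> Tuple[str, str]:
    """Choose nodes for the two copies of one block, in different protection domains.

    node_domain: node name -> protection domain. The hash-based starting point
    is an illustrative stand-in for Element's real placement logic.
    """
    if len(set(node_domain.values())) < 2:
        raise ValueError("two copies need at least two protection domains")
    nodes = sorted(node_domain)
    start = int(hashlib.sha256(block_id.encode()).hexdigest(), 16) % len(nodes)
    ordered = nodes[start:] + nodes[:start]  # rotate so different blocks spread across nodes
    primary = ordered[0]
    # First node after the primary that belongs to a different protection domain.
    secondary = next(n for n in ordered[1:] if node_domain[n] != node_domain[primary])
    return primary, secondary
```

Every block keeps one copy outside any single failed domain, so the cluster can regenerate the second copy from the surviving one.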

Find more information

•NetApp HCI Resources page

•NetApp Element Plug-in for vCenter Server

Clusters

A cluster is a group of nodes, functioning as a collective whole, that provides storage or compute resources. Starting with NetApp HCI 1.8, you can have a storage cluster with two nodes. A storage cluster appears on the network as a single logical group and can then be accessed as block storage.

The storage layer in NetApp HCI is provided by NetApp Element software and the management layer is provided by the NetApp Element Plug-in for vCenter Server. A storage node is a server containing a collection of drives; storage nodes communicate with each other through the Bond10G network interface. Each storage node is connected to two networks, storage and management, each with two independent links for redundancy and performance. Each node requires an IP address on each network. You can create a cluster with new storage nodes, or add storage nodes to an existing cluster to increase storage capacity and performance.

Authoritative storage clusters

The authoritative storage cluster is the storage cluster that NetApp Hybrid Cloud Control uses to authenticate users.

If your management node has only one storage cluster, that cluster is the authoritative cluster. If your management node has two or more storage clusters, one of those clusters is assigned as the authoritative cluster, and only users from that cluster can log in to NetApp Hybrid Cloud Control. To find out which cluster is the authoritative cluster, use the GET /mnode/about API. In the response, the IP address in the token_url field is the management virtual IP address (MVIP) of the authoritative storage cluster. If you attempt to log in to NetApp Hybrid Cloud Control as a user that is not on the authoritative cluster, the login attempt fails.
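Reading the authoritative MVIP from the GET /mnode/about response comes down to extracting the host from the `token_url` field. The following Python sketch assumes that `token_url` holds a full URL such as `https://<MVIP>/auth/connect/token`. Only the field name comes from this documentation; the URL shape and the sample value are assumptions. `authoritative_mvip` is a hypothetical helper, and it expects the JSON text that an authenticated GET to the management node returned.

```python
import json
from urllib.parse import urlparse


def authoritative_mvip(about_response: str) -> str:
    """Return the host part of `token_url` from a GET /mnode/about JSON response.

    Assumes `token_url` is a full URL (for example "https://<MVIP>/auth/connect/token").
    """
    token_url = json.loads(about_response)["token_url"]
    host = urlparse(token_url).hostname
    if not host:
        raise ValueError(f"no host in token_url: {token_url!r}")
    return host
```

With the hypothetical response `{"token_url": "https://10.0.0.50/auth/connect/token"}`, the function returns `10.0.0.50`.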

Many NetApp Hybrid Cloud Control features are designed to work with multiple storage clusters, but authentication and authorization have limitations: a user from the authoritative cluster can execute actions on other clusters tied to NetApp Hybrid Cloud Control even if they are not a user on those other storage clusters. Before managing multiple storage clusters, ensure that users defined on the authoritative cluster are also defined on all other storage clusters with the same permissions. You can manage users with NetApp Hybrid Cloud Control or from the Element software user interface (Element web UI).

See Create and manage storage cluster assets for more information on working with management node storage cluster assets.

Stranded capacity

If a newly added node accounts for more than 50 percent of the total cluster capacity, some of the capacity of this node is made unusable ("stranded") so that the node complies with the capacity rule. This remains the case until more storage capacity is added. If a very large node is added that also disobeys the capacity rule, the previously stranded node is no longer stranded, while the newly added node becomes stranded. To prevent this, always add capacity in pairs. When a node becomes stranded, the cluster reports an appropriate cluster fault.
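The 50 percent rule can be made concrete with a simplified model. The model assumes that a node can contribute at most the combined capacity of all the other nodes, so any capacity above that is stranded. The following Python sketch implements only this assumption. Element's real capacity accounting may differ, and `usable_capacity` and `stranded_capacity` are hypothetical helpers. The capacity units are illustrative.

```python
from typing import Dict


def usable_capacity(nodes: Dict[str, int]) -> Dict[str, int]:
    """Per-node usable capacity under a simplified reading of the 50 percent rule.

    Assumption: a node can contribute at most the combined capacity of all other
    nodes, so no single node exceeds half of the cluster total.
    """
    total = sum(nodes.values())
    return {name: min(cap, total - cap) for name, cap in nodes.items()}


def stranded_capacity(nodes: Dict[str, int]) -> Dict[str, int]:
    """Capacity that cannot be used on each stranded node."""
    usable = usable_capacity(nodes)
    return {name: cap - usable[name] for name, cap in nodes.items() if cap > usable[name]}


print(stranded_capacity({"n1": 10, "n2": 10, "n3": 30}))            # {'n3': 10}
print(stranded_capacity({"n1": 10, "n2": 10, "n3": 30, "n4": 60}))  # {'n4': 10}
```

The second call reproduces the scenario described above. Adding the very large node `n4` un-strands `n3` and strands the newcomer instead.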

Two-node storage clusters

Starting with NetApp HCI 1.8, you can set up a storage cluster with two storage nodes.

•You can use certain types of nodes to form the two-node storage cluster. See NetApp HCI 1.8 Release Notes.

The storage nodes in a two-node cluster must be the same model type.

•Two-node storage clusters are best suited for small-scale deployments with workloads that do not require large capacity or high performance.

•In addition to two storage nodes, a two-node storage cluster also includes two NetApp HCI Witness Nodes.

Learn more about Witness Nodes.

•You can scale a two-node storage cluster to a three-node storage cluster. Three-node clusters increase resiliency by providing the ability to auto-heal from storage node failures.

•Two-node storage clusters provide the same security features and functionality as the traditional four-node storage clusters.

•Two-node storage clusters use the same networks as four-node storage clusters. The networks are set up during NetApp HCI deployment using the NetApp Deployment Engine wizard.

Storage cluster quorum

Element software creates a storage cluster from selected nodes; the cluster maintains a replicated database of the cluster configuration. A minimum of three nodes is required to participate in the cluster ensemble to maintain quorum for cluster resiliency. Witness Nodes in a two-node cluster ensure that there are enough nodes to form a valid ensemble quorum. For ensemble creation, storage nodes are preferred over Witness Nodes. For the minimum three-node ensemble involving a two-node storage cluster, two storage nodes and one Witness Node are used.

In a three-node ensemble with two storage nodes and one Witness Node, if one storage node goes offline, the cluster goes into a degraded state. Of the two Witness Nodes, only one can be active in the ensemble. The second Witness Node cannot be added to the ensemble, because it performs the backup role. The cluster stays in degraded state until the offline storage node returns to an online state, or a replacement node joins the cluster.

If a Witness Node fails, the remaining Witness Node joins the ensemble to form a three-node ensemble. You can deploy a new Witness Node to replace the failed Witness Node.
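The ensemble rules above fit in a few small functions. The following Python sketch covers four of them: storage nodes are preferred, at most one Witness Node is active, a majority of members keeps quorum, and a standby Witness Node replaces a failed one. It models only the minimum three-member ensemble. The function names are hypothetical, and the "healthy"/"degraded"/"no quorum" labels are this sketch's own.

```python
from typing import List, Set

ENSEMBLE_SIZE = 3         # minimum ensemble size described above
MAX_ACTIVE_WITNESSES = 1  # only one Witness Node is active; the other is on standby


def form_ensemble(storage_nodes: List[str], witness_nodes: List[str]) -> List[str]:
    """Form a three-member ensemble, preferring storage nodes over Witness Nodes."""
    members = sorted(storage_nodes)[:ENSEMBLE_SIZE]
    if len(members) < ENSEMBLE_SIZE:
        members += sorted(witness_nodes)[:MAX_ACTIVE_WITNESSES]
    if len(members) < ENSEMBLE_SIZE:
        raise RuntimeError("not enough nodes to form a valid ensemble quorum")
    return members


def ensemble_state(members: List[str], online: Set[str]) -> str:
    """'healthy' if all members are up, 'degraded' while a majority is up, else 'no quorum'."""
    up = sum(1 for m in members if m in online)
    if up == len(members):
        return "healthy"
    return "degraded" if up > len(members) // 2 else "no quorum"


def replace_failed_witness(members: List[str], failed: str, standby: List[str]) -> List[str]:
    """Swap a failed Witness Node for the standby Witness Node."""
    if failed not in members or not standby:
        raise ValueError("nothing to replace, or no standby Witness Node available")
    return [standby[0] if m == failed else m for m in members]
```

For a two-node cluster with Witness Nodes `w1` and `w2`, the ensemble is the two storage nodes plus `w1`. Losing one storage node leaves two of three members online, so the ensemble is degraded but still has quorum.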

Auto-healing and failure handling in two-node storage clusters

If a hardware component fails in a node that is part of a traditional cluster, the cluster can rebalance data that was on the failed component to other available nodes in the cluster. This automatic healing is not available in a two-node storage cluster, because a minimum of three physical storage nodes must be available to the cluster for automatic healing. When one node in a two-node cluster fails, the two-node cluster does not require regeneration of a second copy of data. New writes for block data are replicated in the remaining active storage node. When the failed node is replaced and joins the cluster, the data is rebalanced between the two physical storage nodes.

Storage clusters with three or more nodes

Expanding from two storage nodes to three storage nodes makes your cluster more resilient by allowing auto-healing in the event of node and drive failures, but does not provide additional capacity. You can expand using the NetApp Hybrid Cloud Control UI. When expanding from a two-node cluster to a three-node cluster, capacity can be stranded (see Stranded capacity). The UI wizard shows warnings about stranded capacity before installation. A single Witness Node is still available to keep the ensemble quorum in the event of a storage node failure, with a second Witness Node on standby.

When you expand a three-node storage cluster to a four-node cluster, capacity and performance are increased. In a four-node cluster, Witness Nodes are no longer needed to form the cluster quorum.

You can expand to up to 64 compute nodes and 40 storage nodes.
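The sizing limits stated in this guide can be combined into a quick pre-check for a planned layout. These are at most 64 compute and 40 storage nodes, at least two compute nodes, two-node storage clusters from NetApp HCI 1.8, two Witness Nodes for a two-node storage cluster, and up to four Witness Nodes per storage cluster. The following Python sketch gathers these rules in a hypothetical `check_layout` function. It does not replace the checks that the NetApp Deployment Engine or NetApp Hybrid Cloud Control perform.

```python
from typing import List

MAX_COMPUTE_NODES = 64
MAX_STORAGE_NODES = 40
MIN_COMPUTE_NODES = 2  # regardless of storage cluster size
MIN_STORAGE_NODES = 2  # two-node storage clusters, NetApp HCI 1.8 and later
MAX_WITNESS_NODES = 4  # per storage cluster


def check_layout(storage: int, compute: int, witnesses: int) -> List[str]:
    """Return rule violations for a proposed NetApp HCI layout (empty list if none)."""
    problems = []
    if not MIN_COMPUTE_NODES <= compute <= MAX_COMPUTE_NODES:
        problems.append(f"compute nodes must be {MIN_COMPUTE_NODES}-{MAX_COMPUTE_NODES}, got {compute}")
    if not MIN_STORAGE_NODES <= storage <= MAX_STORAGE_NODES:
        problems.append(f"storage nodes must be {MIN_STORAGE_NODES}-{MAX_STORAGE_NODES}, got {storage}")
    if storage == 2 and witnesses < 2:
        problems.append("a two-node storage cluster needs two Witness Nodes")
    if witnesses > MAX_WITNESS_NODES:
        problems.append(f"at most {MAX_WITNESS_NODES} Witness Nodes per storage cluster")
    return problems
```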

Find more information

•NetApp HCI Two-Node Storage Cluster | TR-4823

•NetApp Element Plug-in for vCenter Server

•SolidFire and Element Software Documentation Center

Nodes

Nodes are hardware or virtual resources that are grouped into a cluster to provide block storage and compute capabilities.

NetApp HCI and Element software define various node roles for a cluster. The four types of node roles are management node, storage node, compute node, and NetApp HCI Witness Node.

Management node

The management node (sometimes abbreviated as mNode) interacts with a storage cluster to perform management actions, but is not a member of the storage cluster. Management nodes periodically collect information about the cluster through API calls and report this information to Active IQ for remote monitoring (if enabled). Management nodes are also responsible for coordinating software upgrades of the cluster nodes.

The management node is a virtual machine that runs in parallel with one or more Element software-based storage clusters. In addition to upgrades, it is used to provide system services including monitoring and telemetry, manage cluster assets and settings, run system tests and utilities, and enable NetApp Support access for troubleshooting. As of the Element 11.3 release, the management node functions as a microservice host, allowing for quicker updates of select software services outside of major releases. These microservices or management services, such as the Active IQ collector, QoSSIOC for the vCenter Plug-in, and management node service, are updated frequently as service bundles.

Storage nodes

NetApp HCI storage nodes are hardware that provide the storage resources for a NetApp HCI system. Drives in the node contain block and metadata space for data storage and data management. Each node contains a factory image of NetApp Element software. NetApp HCI storage nodes can be managed using the NetApp Element Management extension point.

Compute nodes

NetApp HCI compute nodes are hardware that provides compute resources, such as CPU, memory, and networking, that are needed for virtualization in the NetApp HCI installation. Because each server runs VMware ESXi, NetApp HCI compute node management (adding or removing hosts) must be done outside of the plug-in within the Hosts and Clusters menu in vSphere. Regardless of whether it is a four-node storage cluster or a two-node storage cluster, the minimum number of compute nodes remains two for a NetApp HCI deployment.

Witness Nodes

NetApp HCI Witness Nodes are virtual machines that run on compute nodes in parallel with an Element software-based storage cluster. Witness Nodes do not host slice or block services. A Witness Node enables storage cluster availability in the event of a storage node failure. You can manage and upgrade Witness Nodes in the same way as other storage nodes. A storage cluster can have up to four Witness Nodes. Their primary purpose is to ensure that enough cluster nodes exist to form a valid ensemble quorum.

Learn more about Witness Node resource requirements and Witness Node IP address requirements.

In a two-node storage cluster, a minimum of two Witness Nodes are deployed for redundancy in the event of a Witness Node failure. When the NetApp HCI installation process installs Witness Nodes, a virtual machine template is stored in VMware vCenter that you can use to redeploy a Witness Node in case it is accidentally removed, lost, or corrupted. You can also use the template to redeploy a Witness Node if you need to replace a failed compute node that was hosting the Witness Node. For instructions, see Redeploy Witness Nodes for two and three-node storage clusters.

Find more information

•NetApp HCI Two-Node Storage Cluster | TR-4823

•NetApp Element Plug-in for vCenter Server

•SolidFire and Element Software Documentation Center

Storage

Maintenance mode

If you need to take a storage node offline for maintenance such as software upgrades or host repairs, you can minimize the I/O impact to the rest of the storage cluster by enabling maintenance mode for that node. You can use maintenance mode with both appliance nodes and SolidFire Enterprise SDS nodes.

You can only transition a storage node to maintenance mode if the node is healthy (has no blocking cluster faults) and the storage cluster is tolerant to a single node failure. Once you enable maintenance mode for a healthy and tolerant node, the node is not immediately transitioned; it is monitored until the following conditions are true:

•All volumes hosted on the node have failed over

•The node is no longer hosting as the primary for any volume

•A temporary standby node is assigned for every volume being failed over

After these criteria are met, the node is transitioned to maintenance mode. If these criteria are not met within a 5-minute period, the node does not enter maintenance mode.
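The transition rule is a poll-until-ready loop with a 5-minute deadline. The following Python sketch is a client-side illustration of that rule, not how Element implements it. `conditions_met` is a hypothetical callback that should return True only when all three failover conditions hold. The 5-second poll interval is an arbitrary assumption. The clock and sleep functions are injectable so that the logic can be exercised without waiting.

```python
import time
from typing import Callable


def wait_for_maintenance_mode(
    conditions_met: Callable[[], bool],
    timeout_s: float = 300.0,     # the 5-minute window described above
    poll_interval_s: float = 5.0,  # assumed polling interval
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll until all failover conditions hold, or give up after the timeout.

    Returns True if the node would transition to maintenance mode and False
    if the conditions were not met within the timeout.
    """
    deadline = clock() + timeout_s
    while True:
        if conditions_met():
            return True   # node transitions to maintenance mode
        if clock() >= deadline:
            return False  # node does not enter maintenance mode
        sleep(poll_interval_s)
```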

When you disable maintenance mode for a storage node, the node is monitored until the following conditions are true:
