Nexmosphere XV User manual

1. General

Nexmosphere's XV HandGesture sensor can track the position of a person's hand and detect gestures such as pointing,

swiping and thumbs up. This functionality can be used to make touchless user interfaces for public spaces. This document

explains the available functionality and provides instructions on how to install and integrate the sensor into your

digital signage installation.

The information in this document is created for users who are familiar with the Nexmosphere API and are able to control a

basic setup with a Nexmosphere API controller. If this is not the case yet, please read the general documentation on the

Nexmosphere serial API first.

Nexmosphere

Le Havre 136

5627 SW Eindhoven • The Netherlands

T +31 40 240 7070

E support@nexmosphere.com

PRODUCT MANUAL

© 2023 Nexmosphere. All rights reserved. v1.0 / 03-23

All content contained herein is subject to change without prior notice


Table of contents

1. General 1

2. Product overview 1

3. Functionality and API commands 3

3.1 HandGesture detection 3

3.2 Position tracking 6

3.3 Activation zones 8

3.4 Sensor configuration and initialization 11

3.5 HDMI output 13

3.6 Focus mode 14

3.7 USB-updates 15

3.8 Monitoring 16

4. Installation requirements and guidelines 17

4.1 Connection diagrams 17

4.2 Hardware integration guidelines 18

4.3 Guidelines for gesture detection 19

5. Settings 21

5.1 Settings: Sensor behaviour 21

5.2 Settings: API output 22

6. Quick test 23


[Figure: XV HandGesture sensor set-up. Labels: XV-H40, S-CLA05, Mini-Displayport, Mini-HDMI (HDMI), USB-C power supply, CAX-M6W X-talk cable]

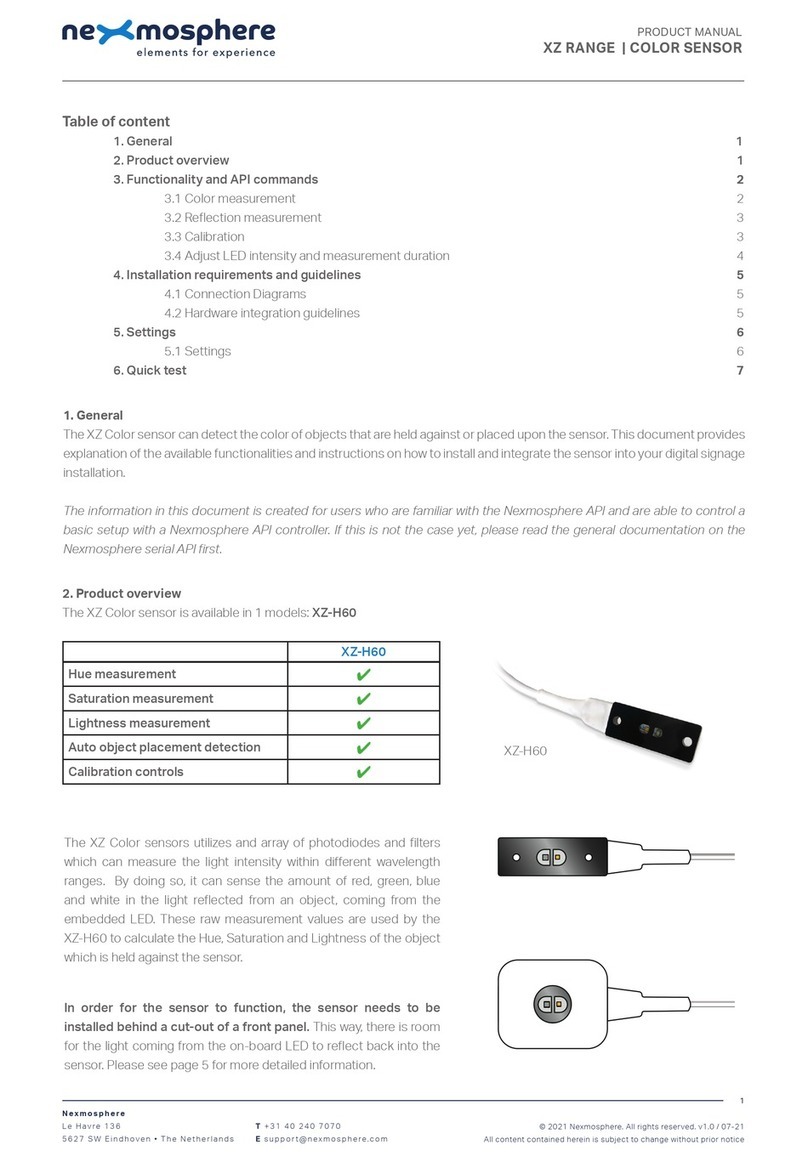

2. Product overview

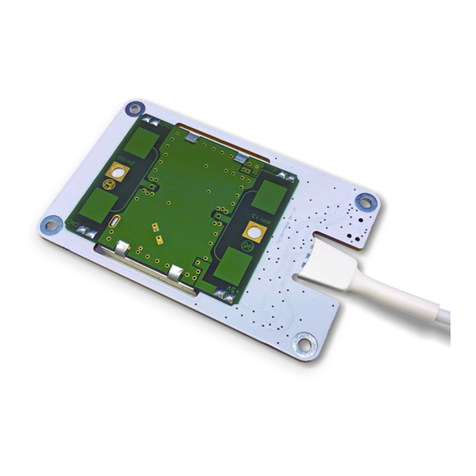

The XV HandGesture sensor consists of 2 items:

XV-H40 HandGesture sensor
S-CLA05 Camera module

To create a functioning HandGesture sensor, the S-CLA05 camera module needs to be connected to the XV-H40 module

with a Mini-Displayport cable (included). The XV-H40 module can then be powered with the provided USB-C power supply.

The XV-H40 is connected to one of Nexmosphere's Xperience controllers using a CAX-M6W X-talk cable.

The XV-H40 sensor analyses the video feed captured by the S-CLA05 camera module utilizing the AI algorithm from Motion

Gestures. This algorithm is embedded into the sensor and does not need to be purchased or licensed separately.

The sensor detects hand gestures such as pointing, swiping and thumbs up. In addition, it can track the position of a person's hand and send out a trigger when the hand enters an Activation zone.

XV-H40
HandGesture detection        ✔
Position tracking            ✔
Activation zone detection    ✔
HDMI output                  ✔

S-CLA05
Max detection distance       180 cm
Field of View                90°


3. Functionality and API commands

The XV HandGesture sensor provides the following functionalities:

1. HandGesture detection - detects gestures, direction, orientation (front/back) and left/right hand
2. Position tracking - tracks the position and rotation of a person's hand
3. Activation zones - define customizable trigger zones and trigger when a hand enters
4. Sensor configuration and initialization - adjust the sensor's behavior and output
5. HDMI output - provides a video feed of the camera's FoV and hand tracking
6. Focus mode - helps to optimize the focus of the camera's lens
7. USB updates - customize several aspects of the sensor's HDMI output
8. Monitoring - monitor the sensor's performance

The following sections will cover each of these functionalities in detail. Please note that for each API example in this

document, X-talk interface address 001 is used (X001). When the sensor is connected to another X-talk channel,

replace the "001" with the applicable X-talk address.

3.1 - HandGesture detection

When a hand gesture is detected, an API message is triggered. Per default, this API message indicates the detected gesture

and the direction of a person's hand. This API message has the following format:

X001B[GEST=OPENPALM:***] *** = direction up, down, left, right, center

X001B[GEST=POINT:***] *** = direction up, down, left, right, center

X001B[GEST=OK:***] *** = direction up, down, left, right, center

X001B[GEST=THUMB:***] *** = direction up, down, left, right, center

X001B[GEST=FIST:***] *** = direction up, down, left, right, center

X001B[GEST=V:***] *** = direction up, down, left, right, center

X001B[GEST=SWIPE:***] *** = direction left, right

X001B[GEST=TAP]

X001B[GEST=GRAB]

X001B[GEST=NOHAND]

X001B[GEST=NOGESTURE] *

[Figure: illustrations of the gestures open palm, point, OK, thumb, fist, V, swipe, tap and grab]

Gesture example                                 API message
Point gesture detected with direction right     X001B[GEST=POINT:RIGHT]
Swipe gesture detected with direction left      X001B[GEST=SWIPE:LEFT]
Thumb gesture detected with direction up        X001B[GEST=THUMB:UP]
Fist gesture detected with direction center     X001B[GEST=FIST:CENTER]
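As an illustration of how a host application might consume these messages, the following minimal Python sketch splits a default-format gesture message into its X-talk address, gesture and direction. The function name `parse_gesture` and the regular expression are assumptions made for this example and are not part of the Nexmosphere API; the sketch only covers the default message format listed above.

```python
import re

# Default gesture message, e.g. X001B[GEST=POINT:RIGHT] or X001B[GEST=TAP].
# The pattern is an assumption derived from the formats listed in this manual.
GESTURE_RE = re.compile(r"^X(\d{3})B\[GEST=([A-Z]+)(?::([A-Z]+))?\]$")


def parse_gesture(message):
    """Return (address, gesture, direction) or None if the message does not match.

    direction is None for messages without a direction, such as TAP or NOHAND.
    """
    match = GESTURE_RE.match(message.strip())
    if match is None:
        return None
    address, gesture, direction = match.groups()
    return address, gesture, direction
```

For example, `parse_gesture("X001B[GEST=POINT:RIGHT]")` returns `("001", "POINT", "RIGHT")`.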


In addition to detecting a gesture and its direction, the sensor can detect whether it is a Left or Right hand and whether the Front or Back of the hand is facing the sensor. Furthermore, the sensor can assign a Hand ID to the detected hand, which serves as an identification when two hands are detected simultaneously. In order to receive all parameters in the API message, the following setting must be sent:

X001S[5:9]    Set output mode to Gesture with Direction, Front/Back, Hand ID and Left/Right hand

The API message then has the following format:

X001B[GEST=#:@:%:OPENPALM:***]    *** = direction up, down, left, right, center
X001B[GEST=#:@:%:POINT:***]       *** = direction up, down, left, right, center
X001B[GEST=#:@:%:OK:***]          *** = direction up, down, left, right, center
X001B[GEST=#:@:%:THUMB:***]       *** = direction up, down, left, right, center
X001B[GEST=#:@:%:FIST:***]        *** = direction up, down, left, right, center
X001B[GEST=#:@:%:V:***]           *** = direction up, down, left, right, center
X001B[GEST=#:@:%:SWIPE:***]       *** = direction left, right
X001B[GEST=#:@:%:TAP:***]
X001B[GEST=#:@:%:GRAB:***]
X001B[GEST=NOHAND]
X001B[GEST=NOGESTURE]

In all of these messages: # = Hand ID 1 or 2, @ = left/right L or R, % = front/back F or B

Gesture example                                                                      API message
Point gesture detected with direction right, Hand ID 1, Right hand, Front side     X001B[GEST=1:R:F:POINT:RIGHT]
Thumb gesture detected with direction up, Hand ID 2, Left hand, Back side          X001B[GEST=2:L:B:THUMB:UP]

The output format of the API messages can also be set to send each parameter in an individual command:

X001S[6:2]    Set output format of Hand Gesture detection to separate commands for each parameter

When the output mode is set to send all parameters (as in the examples on this page), 5 separate API messages will be sent consecutively in the following format:

X001B[ID=#]             # = Hand ID 1 or 2
X001B[HAND=@]           @ = left/right LEFT or RIGHT
X001B[SIDE=%]           % = side FRONT or BACK
X001B[GESTURE=^^^]      ^^^ = gesture OPENPALM, POINT, OK, THUMB, FIST, V, SWIPE, TAP, GRAB, NOHAND, NOGESTURE
X001B[DIRECTION=***]    *** = direction up, down, left, right, center

Gesture example                                                                      API messages
Point gesture detected with direction right, Hand ID 1, Right hand, Front side     X001B[ID=1]
                                                                                     X001B[HAND=RIGHT]
                                                                                     X001B[SIDE=FRONT]
                                                                                     X001B[GESTURE=POINT]
                                                                                     X001B[DIRECTION=RIGHT]

When implementing gesture triggers, consider the following:

• The sensor can be set to different output modes which have several combinations of the parameters explained above. For more information, please see page 22, setting 5.
• Detection can be activated and deactivated for each gesture. For more information, please see page 22, settings 20-28.
• For more detailed information on the gestures themselves, please see pages 19-20.
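A host application could decode the full-parameter format `X001B[GEST=#:@:%:GESTURE:***]` along the lines of the sketch below. It is a minimal example under stated assumptions: the function name `parse_full_gesture` and the dictionary keys are hypothetical, and the parser assumes the field order Hand ID, left/right, front/back, gesture, direction as given in the format lines of this section.

```python
def parse_full_gesture(message):
    """Parse X001B[GEST=#:@:%:GESTURE:DIRECTION] into a dict.

    Returns None for anything that does not fit this layout,
    including X001B[GEST=NOHAND] and X001B[GEST=NOGESTURE].
    """
    text = message.strip()
    if not (text.startswith("X") and text[4:11] == "B[GEST=" and text.endswith("]")):
        return None
    fields = text[11:-1].split(":")
    if len(fields) not in (4, 5):
        return None
    hand_id, hand, side, gesture = fields[:4]
    if hand_id not in ("1", "2") or hand not in ("L", "R") or side not in ("F", "B"):
        return None
    return {
        "address": text[1:4],
        "hand_id": int(hand_id),
        "hand": "LEFT" if hand == "L" else "RIGHT",
        "side": "FRONT" if side == "F" else "BACK",
        "gesture": gesture,
        # The direction field is treated as optional in this sketch.
        "direction": fields[4] if len(fields) == 5 else None,
    }
```

Returning `None` for non-matching input lets the caller fall back to other parsers, for example one for the default format without Hand ID.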


The currently detected Hand Gesture can also be requested at any time by sending one of the following API commands:

Hand Gesture Data requests

X001B[GEST1?]    Request Gesture only
X001B[GEST2?]    Request Gesture with Direction
X001B[GEST3?]    Request Gesture with Direction and Front/Back
X001B[GEST4?]    Request Gesture with Direction and Hand ID
X001B[GEST5?]    Request Gesture with Direction and Left/Right
X001B[GEST6?]    Request Gesture with Direction, Front/Back and Hand ID
X001B[GEST7?]    Request Gesture with Direction, Front/Back and Left/Right
X001B[GEST8?]    Request Gesture with Direction, Hand ID and Left/Right
X001B[GEST9?]    Request Gesture with Direction, Front/Back, Hand ID and Left/Right

When requesting the detected gesture for a specific Hand ID, the ID number can be added to the command with a #.

Example Hand Gesture Data request

X001B[GEST2?#1]    Request the Gesture with Direction of Hand ID 1

The reply is identical to the triggered API messages such as the examples listed on the previous pages.
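The request strings can be generated rather than typed by hand. The sketch below is one possible helper; the name `gesture_request` and its parameters are assumptions for this example. It only assembles the command text and leaves sending it over the serial connection to the application.

```python
def gesture_request(mode, hand_id=None, address=1):
    """Build a Hand Gesture data request such as X001B[GEST2?] or X001B[GEST2?#1].

    mode     request variant 1 to 9, as listed in this section
    hand_id  optional Hand ID (1 or 2) to request a specific hand
    address  X-talk interface address of the sensor
    """
    if not 1 <= mode <= 9:
        raise ValueError("mode must be between 1 and 9")
    if hand_id not in (None, 1, 2):
        raise ValueError("hand_id must be 1, 2 or None")
    suffix = "" if hand_id is None else f"#{hand_id}"
    return f"X{address:03d}B[GEST{mode}?{suffix}]"
```

For example, `gesture_request(2, hand_id=1)` produces the example request shown above, `X001B[GEST2?#1]`.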


3.2 - Position tracking

The XV HandGesture sensor can also track the position, size and angle of a person's hand. The feature can be enabled by sending the following setting command:

X001S[12:3]    Set output interval of hand position information to 500mS

The API message has the following format:

X001B[#:XXX:YYY:SSS:AAA]

# = Hand ID 1 or 2
XXX = X-coordinate 0 to 100 (%)
YYY = Y-coordinate 0 to 100 (%)
SSS = size 0 to 100 (%)
AAA = angle 0 to 360 (°)

Position example                                                                           API message
Hand ID 1, position X = 50% of screen, Y = 70% of screen, size = 12% of screen, angle is 30 degrees     X001B[1:050:070:012:030]
Hand ID 2, position X = 35% of screen, Y = 2% of screen, size = 8% of screen, angle is 332 degrees      X001B[2:035:002:008:332]

The output format of the API messages can also be set to send each parameter in an individual API message:

X001S[13:2]    Set output format of Position tracking to separate commands for each parameter

When the output mode is set to send all parameters (as in the examples on this page), 5 separate API messages will be sent consecutively in the following format:

X001B[ID=#]     # = Hand ID 1 or 2
X001B[X=XXX]    XXX = X-coordinate 0 to 100 (%)
X001B[Y=YYY]    YYY = Y-coordinate 0 to 100 (%)
X001B[S=SSS]    SSS = size 0 to 100 (%)
X001B[A=AAA]    AAA = angle 0 to 360 (°)

Position example                                                                           API messages
Hand ID 1, position X = 50% of screen, Y = 70% of screen, size = 12% of screen, angle is 30 degrees     X001B[ID=1]
                                                                                           X001B[X=050]
                                                                                           X001B[Y=070]
                                                                                           X001B[S=012]
                                                                                           X001B[A=030]

When implementing position tracking, consider the following:

• The X and Y coordinates are provided in % of the camera's Field of View. The top left of the screen is the origin (0,0).
• The size is indicated in % of the height of the camera's Field of View. See page 7 for a visual explanation of the X and Y coordinates as well as the hand size.
• Per default, the reference point for the X and Y coordinate on the detected hand is the tip of the index finger. This can be adjusted via a setting command. For more information please see page 21, setting 16.
• The sensor can be set to different output modes which have several combinations of the parameters explained above. For more information, please see page 22, setting 11.
• The output interval of the sensor can be adjusted as well. For more information please see page 21, setting 12. As the shortest available interval is 250mS, the sensor is not suitable for high speed position tracking (for example to control a mouse cursor). For applications in which a specific position of a hand should cause a trigger, we recommend using the Activation Zones feature, explained in section 3.3, page 8.
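Because the position is reported in percentages of the camera's Field of View, an application that draws on a screen usually needs to convert it to pixels. The sketch below shows one way to do this for the combined `X001B[#:XXX:YYY:SSS:AAA]` message. The function name `parse_position`, the dictionary keys and the default 640x480 target size are assumptions for this example; the size is scaled against the height, in line with the note that size is a percentage of the Field of View height.

```python
def parse_position(message, width=640, height=480):
    """Parse X001B[#:XXX:YYY:SSS:AAA] and convert the percentages to pixels.

    Returns None when the message is not a combined position message.
    """
    text = message.strip()
    if not (text.startswith("X") and text[4:6] == "B[" and text.endswith("]")):
        return None
    fields = [field.strip() for field in text[6:-1].split(":")]
    if len(fields) != 5 or not all(field.isdigit() for field in fields):
        return None
    hand_id, x_pct, y_pct, size_pct, angle = (int(field) for field in fields)
    return {
        "hand_id": hand_id,
        "x_px": round(x_pct * width / 100),      # origin is the top left corner
        "y_px": round(y_pct * height / 100),
        "size_px": round(size_pct * height / 100),  # size is relative to the height
        "angle": angle,
    }
```

Note that a coordinate of 100% maps to the full width or height, so an application that indexes pixels may want to clamp the result to `width - 1` and `height - 1`.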


The currently detected Position can also be requested at any time by sending one of the following API commands:

Position Data requests

X001B[XY?]          Request X and Y coordinates of the detected hand
X001B[SIZE?]        Request the Size of the detected hand
X001B[ANGLE?]       Request the Angle of the detected hand
X001B[POSITION?]    Request X, Y coordinates, Size and Angle of the detected hand

When requesting the position for a specific Hand ID, the ID number can be added to the command with a #.

Example Position Data request

X001B[POSITION?#2]    Request the Position of Hand ID 2

The reply is identical to the triggered API messages such as the examples listed on the previous pages.

[Figure: camera perspective, as via HDMI output. The X axis runs from 0 (left) to 100 (right), the Y axis runs from 0 (top) to 100 (bottom). Size = % of screen height.]


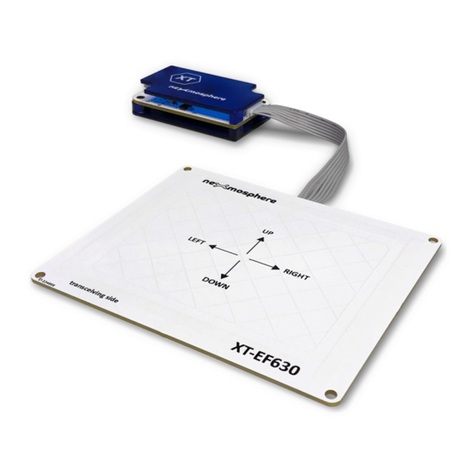

3.3 - Activation zones

The XV HandGesture sensor has user-definable zones which can activate a trigger when the detected hand enters that zone. There are 9 Activation zones available, each of which can be set in position and size. The API command to define an Activation zone is:

X001B[ZONE*=XXX,YYY,WWW,HHH]    Set position and size of Activation zone

* = Activation Zone 1 to 9 (nr)
XXX = X-coordinate 0 to 100 (%)
YYY = Y-coordinate 0 to 100 (%)
WWW = width 0 to 100 (%)
HHH = height 0 to 100 (%)

Set Activation zone example command

X001B[ZONE1=010,020,015,060]    Set Act. zone 1 to X = 10%, Y = 20%, Width = 15%, Height = 60%
X001B[ZONE2=075,020,015,060]    Set Act. zone 2 to X = 75%, Y = 20%, Width = 15%, Height = 60%
X001B[ZONE3=040,040,020,020]    Set Act. zone 3 to X = 40%, Y = 40%, Width = 20%, Height = 20%

When a detected hand enters one of the Activation zones, the following API message is sent:

X001B[#:ZONE*:ENTER]    # = Hand ID 1 or 2, * = Activation Zone 1 to 9

When a detected hand exits one of the Activation zones, the following API message is sent:

X001B[#:ZONE*:EXIT]     # = Hand ID 1 or 2, * = Activation Zone 1 to 9

Activation zone example messages

Detected hand enters Activation zone 3    X001B[1:ZONE3:ENTER]
Detected hand exits Activation zone 9     X001B[1:ZONE9:EXIT]

When the HDMI output mode is set to DEBUG (see section 3.5, page 13), the Activation zones can be visualized on top of the camera feed. In order to activate the visualization of the Activation zones or update them after the parameters have been changed, the following API command must be sent:

X001B[SENDZONES!]    Update the visualization of the Activation zones in Debug mode

[Figure: camera perspective, as via HDMI output, showing the example Activation zones 1, 2 and 3 with their X and Y offsets, widths and heights in % of screen width and screen height. Both axes run from 0 to 100.]
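The zone definition command and the ENTER/EXIT messages lend themselves to small helper functions. The following sketch is a minimal example under stated assumptions: `zone_command` and `parse_zone_event` are hypothetical names, and the range checks simply mirror the value ranges listed for the `ZONE*=XXX,YYY,WWW,HHH` command.

```python
import re


def zone_command(zone, x, y, width, height, address=1):
    """Build a command such as X001B[ZONE1=010,020,015,060] (all values in %)."""
    if not 1 <= zone <= 9:
        raise ValueError("zone must be between 1 and 9")
    for value in (x, y, width, height):
        if not 0 <= value <= 100:
            raise ValueError("x, y, width and height are percentages from 0 to 100")
    return f"X{address:03d}B[ZONE{zone}={x:03d},{y:03d},{width:03d},{height:03d}]"


# Trigger messages, e.g. X001B[1:ZONE3:ENTER] or X001B[1:ZONE9:EXIT].
ZONE_EVENT_RE = re.compile(r"^X\d{3}B\[([12]):ZONE([1-9]):(ENTER|EXIT)\]$")


def parse_zone_event(message):
    """Return (hand_id, zone, event) or None if the message is not a zone trigger."""
    match = ZONE_EVENT_RE.match(message.strip())
    if match is None:
        return None
    hand_id, zone, event = match.groups()
    return int(hand_id), int(zone), event
```

For example, `zone_command(1, 10, 20, 15, 60)` reproduces the first example command, `X001B[ZONE1=010,020,015,060]`.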


In addition to the size and position, several other parameters can be adjusted for each Activation zone:

X001B[ZONE*DELAY=TTTT]       Set the time a hand needs to be in the Activation zone to trigger
                             * = Activation Zone 1 to 9 (nr)
                             TTTT = time 0 to 5000 (mS), Default = 100mS

X001B[ZONE*ANGLE=AA1,AA2]    Set the angle range in which a hand needs to be to cause a trigger
                             * = Activation Zone 1 to 9 (nr)
                             AA1 = angle 1, 0 to 360 (°), Default = 0°
                             AA2 = angle 2, 0 to 360 (°), Default = 360°

X001B[ZONE*HANDSIZE=SS]      Set the minimum size which a hand needs to be to cause a trigger
                             * = Activation Zone 1 to 9 (nr)
                             SS = size 0 to 80 (% of FoV height), Default = 0%

Activation zone parameters example commands

X001B[ZONE1DELAY=0500]       Set the time a hand needs to be in Activation zone 1 to cause a trigger to 500mS
X001B[ZONE5ANGLE=150,210]    Set the angle range a hand needs to be in for Activation zone 5 to 150-210
X001B[ZONE1HANDSIZE=20]      Set the minimum hand size for Activation zone 1 to 20

The following control commands can be used to clear the Activation zone parameters. They will clear the size and position values, and will set the delay, angle and hand size back to the default values.

X001B[ZONE*=CLEAR]           Clear all parameters for a specific Activation Zone
                             * = Activation Zone 1 to 9 (nr)
X001B[CLEARALLZONES]         Clear all parameters for all Activation Zones

When implementing Activation zones, consider the following:

• The X and Y coordinates are defined in % of the camera's Field of View. The top left of the screen is the origin (0,0).
• The width and height are defined in % of the camera's Field of View.
• The minimum hand size is defined in % of the height of the camera's Field of View.
• Per default, the Activation zone triggers when any hand enters. This can be adjusted to only trigger on detected gestures (in the Activation zone) via a setting command. For more information please see page 21, setting 15.
• Per default, the reference point for the X and Y coordinate on the detected hand is the tip of the index finger. This reference point is used to determine whether a hand enters or exits an Activation Zone. The reference point can be adjusted via a setting command. For more information please see page 21, setting 16.
• The hysteresis of the Activation Zones can be adjusted via a setting command. For more information please see page 21, setting 17.
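The per-zone parameter commands can be assembled in the same way as the zone definition. The sketch below is illustrative only: the function names are hypothetical, and the zero-padding widths (4 digits for the delay, 3 for angles, 2 for the hand size) are assumptions inferred from the placeholders and example commands in this section, not a confirmed specification.

```python
def _check_range(name, value, low, high):
    """Raise ValueError when value falls outside the documented range."""
    if not low <= value <= high:
        raise ValueError(f"{name} must be between {low} and {high}")


def zone_delay_command(zone, delay_ms, address=1):
    """Time in mS a hand must stay in the zone, e.g. X001B[ZONE1DELAY=0500]."""
    _check_range("zone", zone, 1, 9)
    _check_range("delay_ms", delay_ms, 0, 5000)
    return f"X{address:03d}B[ZONE{zone}DELAY={delay_ms:04d}]"


def zone_angle_command(zone, angle_1, angle_2, address=1):
    """Angle range in degrees, e.g. X001B[ZONE5ANGLE=150,210]."""
    _check_range("zone", zone, 1, 9)
    _check_range("angle_1", angle_1, 0, 360)
    _check_range("angle_2", angle_2, 0, 360)
    return f"X{address:03d}B[ZONE{zone}ANGLE={angle_1:03d},{angle_2:03d}]"


def zone_handsize_command(zone, size_pct, address=1):
    """Minimum hand size in % of FoV height, e.g. X001B[ZONE1HANDSIZE=20]."""
    _check_range("zone", zone, 1, 9)
    _check_range("size_pct", size_pct, 0, 80)
    return f"X{address:03d}B[ZONE{zone}HANDSIZE={size_pct:02d}]"
```

Validating the ranges on the host side catches out-of-range values before they are sent, since this manual does not describe how the sensor responds to them.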


The current Activation zone parameters can be requested at any time by sending the following API command:

Activation zones Data request

X001B[ZONES?]    Request all parameters for all Activation zones

The replies are identical to the commands used to set the parameters:

X001B[ZONE*=XXX,YYY,WWW,HHH]    * = Activation Zone 1 to 9 (nr)
                                XXX = X-coordinate 0 to 100 (%)
                                YYY = Y-coordinate 0 to 100 (%)
                                WWW = width 0 to 100 (%)
                                HHH = height 0 to 100 (%)

X001B[ZONE*DELAY=TTTT]          * = Activation Zone 1 to 9 (nr)
                                TTTT = time 0 to 5000 (mS)

X001B[ZONE*ANGLE=AA1,AA2]       * = Activation Zone 1 to 9 (nr)
                                AA1 = angle 1, 0 to 360 (°)
                                AA2 = angle 2, 0 to 360 (°)

X001B[ZONE*HANDSIZE=SS]         * = Activation Zone 1 to 9 (nr)
                                SS = size 0 to 80 (%)


3.4 - Sensor configuration and initialization

The XV HandGesture sensor has multiple configuration commands available which determine the behaviour and functionality of the AI engine running on the sensor. In order for these commands to be initialized, the following API command must be sent after one or multiple configuration commands have been sent:

X001B[START!]    (Re)start AI engine (with new configuration commands)

Vice versa, it is also possible to place the sensor in an Idle state:

X001B[STOP!]     Stop AI engine

The following configuration commands are available:

Per default, the AI engine scans the entire FoV (Field of View) of the camera to detect a hand. This detection canvas can be decreased and set to a specific area of the FoV. A smaller canvas will result in faster hand detection. The feature can be used to only focus on specific areas or to mimic optical zoom when combined with a higher camera resolution. This API command has the following format:

X001B[CANVAS=XXX,YYY,WWW,HHH]    Set the active detection canvas for the AI engine

XXX = X-coordinate 0 to 100 (%), Default = 0
YYY = Y-coordinate 0 to 100 (%), Default = 0
WWW = width 0 to 100 (%), Default = 100
HHH = height 0 to 100 (%), Default = 100

See page 7 for an explanation and visualization of the definition of the coordinates, width and height.

The camera feed can be rotated 180 degrees. This is useful in cases where it is preferable to install the camera in an upside-down position in which the cable protrudes from the top of the camera module instead of the bottom. These API commands have the following format:

X001B[CAMROT=180]    Rotate the camera feed 180 degrees (cable on top)
X001B[CAMROT=000]    Default camera feed orientation (cable on bottom)

Per default the camera records in a resolution of 640x480. The resolution in which the camera records also determines the resolution in which the HDMI connector outputs the video feed. This resolution can be adjusted to 1280x720 via an API command. Please note that the higher the camera resolution, the slower the hand detection will be, as it takes more time for the AI engine to analyse each frame. This API command has the following format:

X001B[CAMRES=RRRR]    Set the resolution of the camera capture and HDMI output

RRRR = resolution 480 or 720, Default = 480

For analysing dynamic gestures, the resolution must be kept at 480. When only using Activation zones, the resolution can be set to 720. In this case the response and FPS will be slower, but the resolution of the video output on the HDMI will be higher.

When adjusting the camera resolution, the resolution at which the AI engine analyses the video feed must be adjusted as well, to match the value of the camera resolution. This API command has the following format:

X001B[ALGRES=RRRR]    Set the resolution of the AI engine

RRRR = resolution 480 or 720, Default = 480
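Since configuration commands only take effect after a `START!` command, it can help to generate them as one batch that always ends with `START!`. The sketch below illustrates this for the canvas and camera rotation commands. The function name `configuration_batch` and its parameters are assumptions for this example; it returns the command strings and leaves sending them to the application.

```python
def configuration_batch(canvas=None, rotate_180=None, address=1):
    """Return configuration commands followed by START!, which applies them.

    canvas      optional (x, y, width, height) tuple in % of the Field of View
    rotate_180  optional bool; True for cable on top, False for cable on bottom
    """
    prefix = f"X{address:03d}B"
    commands = []
    if canvas is not None:
        x, y, width, height = canvas
        for value in (x, y, width, height):
            if not 0 <= value <= 100:
                raise ValueError("canvas values are percentages from 0 to 100")
        commands.append(f"{prefix}[CANVAS={x:03d},{y:03d},{width:03d},{height:03d}]")
    if rotate_180 is not None:
        commands.append(f"{prefix}[CAMROT={'180' if rotate_180 else '000'}]")
    # The AI engine must be (re)started for the commands above to take effect.
    commands.append(f"{prefix}[START!]")
    return commands
```

For example, `configuration_batch(canvas=(25, 25, 50, 50), rotate_180=True)` yields the canvas command, the rotation command and a final `X001B[START!]`.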


The gestures "Swipe", "Tap" and "Grab" (see page 3) are dynamic gestures for which the AI engine on the sensor needs to analyse a sequence of video frames. The detection of these gestures is enabled by default, but can be disabled. The advantage of disabling the detection of dynamic gestures when not needed is that it will make the detection of other gestures faster. The API commands to disable or enable dynamic gestures are:

X001B[DYNAMIC=ON]     Enable dynamic gestures (default)
X001B[DYNAMIC=OFF]    Disable dynamic gestures

Per default, the sensor can detect 2 hands simultaneously. This can be adjusted to only detecting 1 hand at a time. This will make the sensor less flexible, as it keeps tracking the first hand it detects. However, it will make the hand detection faster. The corresponding API commands have the following format:

X001B[NRHANDS=1]      Set AI engine to only detect 1 hand at a time
X001B[NRHANDS=2]      Set the AI engine to detect 2 hands simultaneously

Per default, the sensor will only detect hands with a minimum size of 10% of the height of the sensor's Field of View. De facto, this determines the maximum distance at which a hand is detected (larger size = closer to sensor). This threshold can be adjusted with the following API command:

X001B[MINSIZE=SSS]    Set the minimum size for a hand to be detected

SSS = size 0 to 100 (%), Default = 10

Several Data requests are available regarding the sensor's configuration:

Configuration Data requests

X001B[CANVAS?]        Request current active canvas
X001B[NRHANDS?]       Request the number of hands the sensor can detect simultaneously

The replies are identical to the commands used to set the parameters.


3.5 - HDMI output

The mini-HDMI connector on the XV HandGesture sensor can provide a real time video output of the camera feed. This output can be set to multiple modes. In order for these modes to be initialized, the following command must be sent after a new HDMI command has been sent:

X001B[START!]    (Re)start AI engine (with new configuration commands)

The following HDMI-output commands are available:

X001B[HDMI=DEBUG]         Set the HDMI output to Debug mode.
                          Shows camera feed with additional debug information:
                          hand skeleton, tracking information, gesture detection, performance information

X001B[HDMI=FULL]          Set the HDMI output to Full mode (Default mode).
                          Shows camera feed with gesture detection information and white skeleton

X001B[HDMI=CAMERA]        Set the HDMI output to Camera mode.
                          Shows camera feed only

X001B[HDMI=SKELETON:*]    Set the HDMI output to Skeleton mode.
                          Only shows real-time hand skeleton, no camera feed, customizable background image
                          * = Color scheme for Skeleton 1 to 9 (nr)
                          1 = white, 2 = grey, 3 = black, 4 = red, 5 = blue,
                          6 = green, 7 = yellow, 8 = orange, 9 = purple, 0 = rainbow

X001B[HDMI=ICON]          Set the HDMI output to Icon mode.
                          Shows a (customizable) hand icon on the real-time position of the detected hand,
                          no camera feed, customizable background image

X001B[HDMI=BLANK]         Set the HDMI output to Blank mode.
                          Only shows customizable background image

For modes with a background image (Icon, Blank and Skeleton), the background image can be customized via a USB-update procedure. This procedure is explained on page 15.

The hand icon image used in Icon mode can be customized via a USB-update procedure. This procedure is explained on page 15.

The HDMI output mode can be requested at any time by sending the following API command:

HDMI output Data request

X001B[HDMI?]    Request current HDMI mode

The reply will be identical to the command used to set the HDMI mode (listed above).


3.6 - Focus mode

During installation, the sensor can be set to a special Focus mode. This mode helps to optimize the focus of the camera lens to the person in front of the sensor. In order to start Focus mode, the following API command must be sent to the sensor:

X001B[STARTFOCUS!]    Start Focus mode

When the sensor is running in Focus mode, the HDMI output will show the live camera feed with the following information in the top left corner:

Focus = XXX
Best = XXX

The "Focus" value indicates the current focus level (higher is better) and the "Best" value indicates the highest focus level detected until then. The "Focus" and "Best" values are also provided via the following API message:

X001B[FOCUS:FFFF,BBBB]    FFFF = Current focus value 000 to 1000
                          BBBB = Best focus value 000 to 1000

The lens of the camera module can be rotated to adjust its focus point. In order to optimize focus, we recommend the following procedure:

1. Make sure a person stands in front of the sensor, at the distance where most people will stand when interacting.
2. Ask the person to make a hand gesture that is used in the application, for example Point or Thumbs up. The person should try to stand as still as possible.
3. Preferably, the background (what is happening behind the person) is as consistent as possible as well.
4. Start Focus mode by sending the start command listed above.
5. Rotate the camera lens to the left. If the "Best" value increases, keep rotating until it stops increasing and the "Focus" value (almost) matches the "Best" value. In case the "Best" value does not increase, but instead the "Focus" value decreases, go to step 6.
6. Rotate the camera lens to the right. If the "Best" value increases, keep rotating until it stops increasing and the "Focus" value (almost) matches the "Best" value.

When the focus procedure is finished, the following command must be sent to place the sensor back in normal operation mode:

X001B[START!]    (Re)start AI engine (with new configuration commands)
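During the focus procedure, an installation tool could read the `X001B[FOCUS:FFFF,BBBB]` messages and indicate when the current value is close to the best value. The sketch below is a minimal illustration: the function names and the 5% tolerance are assumptions for this example, as this manual only states that the "Focus" value should (almost) match the "Best" value.

```python
import re

# Focus message, e.g. X001B[FOCUS:0420,0450] (current value, best value).
FOCUS_RE = re.compile(r"^X\d{3}B\[FOCUS:(\d{1,4}),(\d{1,4})\]$")


def parse_focus(message):
    """Return (current, best) as integers, or None if this is not a focus message."""
    match = FOCUS_RE.match(message.strip())
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def focus_is_near_best(message, tolerance=0.05):
    """True when the current focus value is within `tolerance` of the best value."""
    values = parse_focus(message)
    if values is None:
        return False
    current, best = values
    return best > 0 and current >= best * (1 - tolerance)
```

A tool built on this could, for example, prompt the installer to stop rotating the lens once `focus_is_near_best` returns `True` for several consecutive messages.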


3.7 - USB updates

The sensor offers several options to customize the look and feel of the video output of the sensor. The following options are available:

- Update the hand icon image used in Icon mode
- Update the background image used in Icon, Skeleton and Blank mode
- Update the image during start-up of the sensor
- Update the video shown when the sensor is in idle state (AI engine not running)

[Figure: location of the USB-A connector on the XV sensor]

The background image can be customized by following the procedure below:

1. Create your custom background image in the following 2 resolutions: 640x480 and 1280x720.
2. Save them as a .jpg or .png with the following (exact) filenames:
   Background-480.jpg or .png
   Background-720.jpg or .png
3. Store the files on a USB stick.
4. Unplug the USB-C power supply, and insert the USB stick in the USB-A connector.
5. Repower the sensor.
6. The sensor will automatically update the background images with the customized images on the USB stick.
7. The status of the upload procedure is indicated on your screen via the HDMI output connector. Alternatively, you will also receive the following API message:
   X001B[UPLOAD=SUCCES]
8. After uploading has finished, the sensor will shut down. Unplug the USB stick and repower the sensor.

The hand icon image can be customized by following the procedure below:

1. Create your custom hand icon image in the resolution 400x400, and place it in a 460x460 canvas, resulting in a 30px transparent border.
2. Save it as a .png with the following (exact) filename: Hand.png
3. Store the file on a USB stick.
4. Unplug the USB-C power supply, and insert the USB stick in the USB-A connector.
5. Repower the sensor.
6. The sensor will automatically update the hand icon image with the customized image on the USB stick.
7. The status of the upload procedure is indicated on your screen via the HDMI output connector. Alternatively, you will also receive the following API message:
   X001B[UPLOAD=SUCCES]
8. After uploading has finished, the sensor will shut down. Unplug the USB stick and repower the sensor.

The start-up image can be customized by following the procedure below:

1. Create your custom start-up image in the following 2 resolutions: 640x480 and 1280x720.
2. Save them as a .jpg or .png with the following (exact) filenames:
   Boot-480.jpg or .png
   Boot-720.jpg or .png
3. Follow steps 3 to 7 listed in the sections above.

The video which is shown when the sensor is in idle mode can be customized by following the procedure below:

1. Create your custom video in the following 2 resolutions: 640x480 and 1280x720.
2. Save them as a .mp4 (exported with an H.264 codec) with the following filenames:
   Idle-480.mp4
   Idle-720.mp4
3. Follow steps 3 to 7 listed in the sections above.


3.8 - Monitoring and control

The sensor offers several features to monitor the status, performance and system health of the sensor. The following API messages are sent automatically:

X001B[INFO=SENSOR READY ]
Indicates when the sensor is ready for operation after a power cycle

X001B[ERROR=NO CAMERA FOUND]
Error message indicating that no camera is connected

X001B[WARNING=TEMP IS HIGH]
Warning message indicating that the sensor's temperature is high (65°C). In this case, it is advisable to add additional airflow to the sensor installation

X001B[WARNING=TEMP IS CRITICAL]
Warning message indicating that the sensor's temperature is critical (75°C). Immediate action is required to reduce the sensor's temperature

X001B[INFO=TEMP IS OK]
Info message indicating that the sensor's temperature is back at an OK level. This message is only sent in case the temperature has been critical or high

X001B[UPLOAD=STARTED]
Indicates that the USB upload procedure has started

X001B[UPLOAD=SUCCES ]
Indicates that the USB upload procedure has been completed successfully

X001B[UPLOAD=STARTED]
Indicates that the USB upload procedure has failed

Monitoring Data request

The sensor's performance and system health can be checked with the following Data requests:

X001B[FPS?]
Request the FPS which the AI engine currently analyses (higher = faster)

The reply will be as follows:

X001B[FPS=FF]
FF=FPS 00 to 99 (Frames per Second)

X001B[TEMP?]
Request the sensor's current temperature

The reply will be as follows:

X001B[TEMP=TT°C ]
TT=Temperature 00 to 99 (degrees Celsius)

When the 5V USB-C power supply is connected to the sensor, it will automatically start. It will take approximately 60 seconds for the sensor to get into operational mode. During operation, the sensor can be rebooted via the following API command:

X001B[REBOOT!]
Reboots the AI engine processors

After a reboot command, the sensor will restart into Idle mode. In order to set the sensor into operational mode, a START! command must be sent when the sensor has finished rebooting (see page 11).
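On the host side, the monitoring messages and Data request replies from this section can be handled with a small parser. The sketch below is one possible approach and not part of the Nexmosphere API. The names `parse_message`, `describe` and `STATUS` are hypothetical. It assumes every message follows the X001B[KEY=VALUE] shape shown in this manual, with a three-digit address, and it tolerates the optional space before the closing bracket.

```python
import re

# Assumed message shape, based only on the examples in this manual.
MESSAGE_RE = re.compile(r"^X(\d{3})B\[([A-Z]+)=(.*)\]$")

# Status messages as printed in this section (labels on the right are illustrative).
STATUS = {
    ("INFO", "SENSOR READY"): "ready",
    ("ERROR", "NO CAMERA FOUND"): "no_camera",
    ("WARNING", "TEMP IS HIGH"): "temp_high",
    ("WARNING", "TEMP IS CRITICAL"): "temp_critical",
    ("INFO", "TEMP IS OK"): "temp_ok",
    ("UPLOAD", "STARTED"): "upload_started",
    ("UPLOAD", "SUCCES"): "upload_success",
}


def parse_message(line):
    """Split a message into (address, key, value); return None if it does not match."""
    match = MESSAGE_RE.match(line.strip())
    if match is None:
        return None
    address, key, value = match.groups()
    return address, key, value.strip()  # drop padding such as "READY "


def describe(line):
    """Return a short label for a monitoring message or Data request reply."""
    parsed = parse_message(line)
    if parsed is None:
        return "unrecognised"
    _, key, value = parsed
    if key == "FPS" and re.fullmatch(r"\d{2}", value):
        return f"fps:{int(value)}"
    temperature = re.fullmatch(r"(\d{2})°C", value)
    if key == "TEMP" and temperature:
        return f"temperature:{int(temperature.group(1))}"
    return STATUS.get((key, value), "unrecognised")
```

A host application could call `describe` on every incoming line and, for example, log a warning on `temp_high` and stop sending commands on `temp_critical`.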


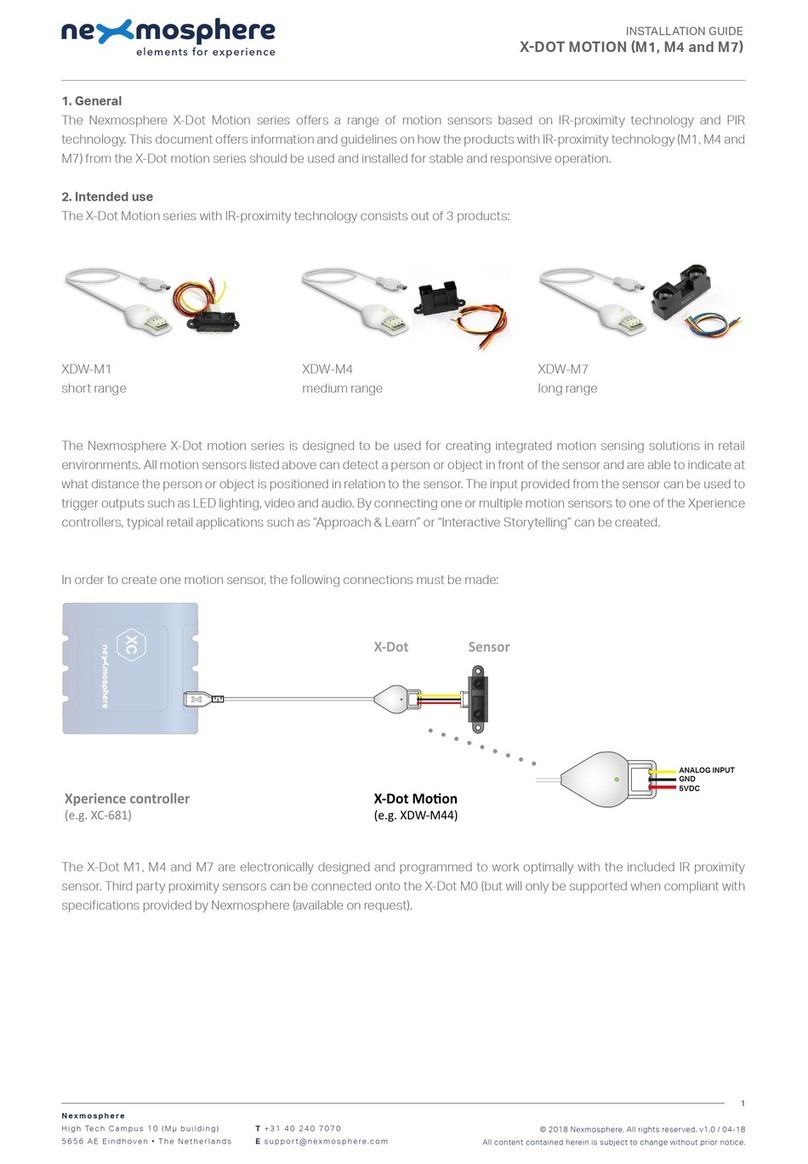

When integrating an XV HandGesture sensor into your digital signage installation, several installation requirements and guidelines need to be taken into account in order for the sensor to perform optimally and operate stably.

The XV HandGesture sensor can be connected to any X-talk interface and is therefore compatible with all Xperience controllers. Make sure the sensor is powered and connected to the X-talk interface before powering the Xperience controller. Otherwise, the sensor will not be recognized by the Xperience controller and no sensor output will be provided.


The sensor is a 15 watt device which dissipates heat through its full aluminium body. When installing the XV-H40 module, please make sure the environment has a (mild) airflow, allowing the device to stay below the absolute maximum operating temperature of 75°C. Installing the sensor in a vertical position will help to create airflow.

Figure: Example connection to XC Controller (labels: DC Power supply, USB-C Power supply, XC, XV, HDMI)

Figure: Example connection to XN Controller (labels: XN, USB-C Power supply, XV, HDMI)


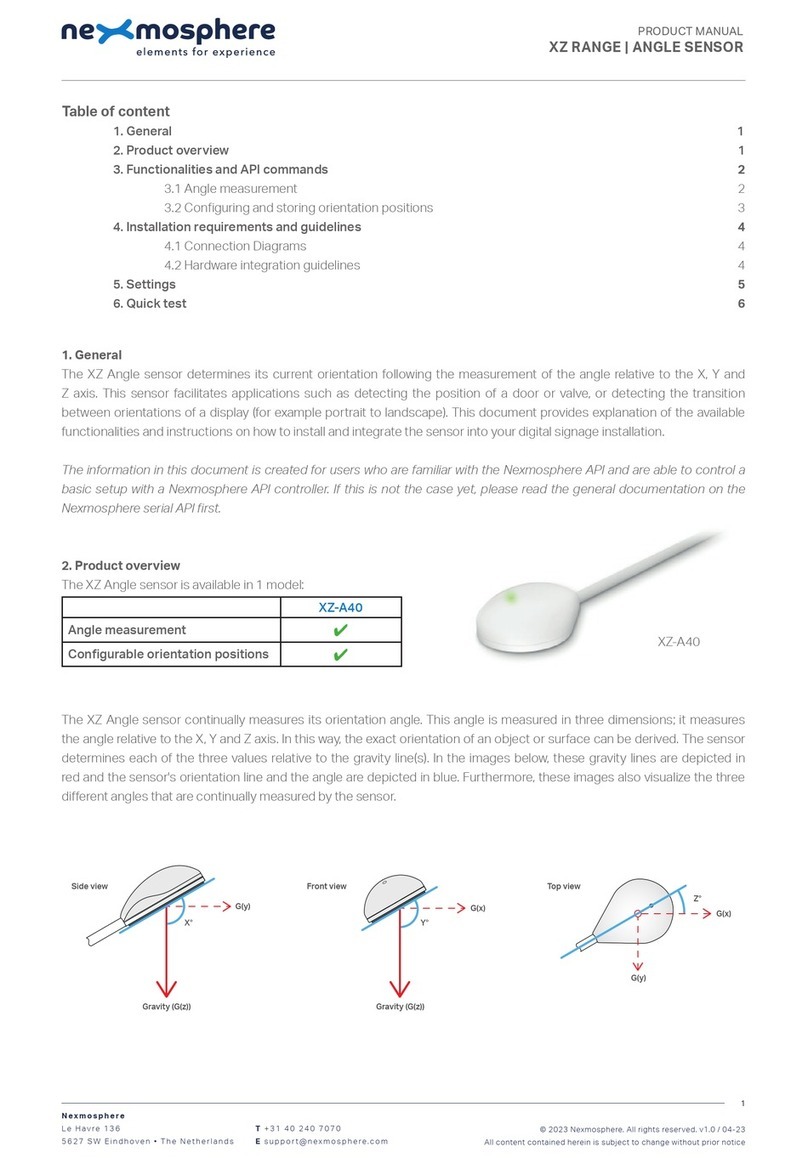

When installing the camera module, we recommend taking the following guidelines into account:

Horizontal positioning

Install the camera centered on the location where the person interacting with the sensor will be standing. If this is not possible, we recommend installing the sensor as close as possible to the center, on the right hand side of the person standing in front of the sensor.

Vertical positioning

The sensor has a 90 degrees Field of View. We recommend installing the sensor at a height at which a slightly raised hand of the person in front of the sensor will enter the camera's field of view. Depending on the distance of the person in front of the display, the recommended installation height is typically between 1.3 and 1.7 meters.

For the horizontal and vertical positioning of the camera, we recommend always testing with the HDMI feed to check that a person's hand is well within the sensor's field of view (also when making dynamic gestures, such as swipes).
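As a rough planning aid before testing with the HDMI feed, the area covered by the camera at a given distance can be estimated from the 90 degrees Field of View with basic trigonometry. The sketch below is only an approximation. It assumes that the 90 degrees applies to the axis being considered (this manual does not state whether the figure is horizontal, vertical or diagonal), that the camera looks straight ahead without tilt, and that lens distortion can be ignored. The function names are hypothetical.

```python
import math


def covered_extent(distance_m, fov_degrees=90.0):
    """Width (or height) in metres seen by the camera at `distance_m`."""
    half_angle = math.radians(fov_degrees / 2.0)
    return 2.0 * distance_m * math.tan(half_angle)


def hand_in_view(camera_height_m, hand_height_m, distance_m, fov_degrees=90.0):
    """True if a hand at `hand_height_m` lies within the estimated vertical extent."""
    half_extent = covered_extent(distance_m, fov_degrees) / 2.0
    return abs(hand_height_m - camera_height_m) <= half_extent
```

With these assumptions, a camera at 1.5 meters covers roughly 2 meters at a distance of 1 meter, so a hand raised to 1.2 meters falls inside the estimate, while a hand at 0.6 meters at a distance of 0.5 meters does not. The HDMI feed remains the reference for the final check.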

Light conditions

The sensor works optimally in even light conditions, meaning that there is no strong dark/light contrast in the sensor's Field of View. Especially strong light sources pointed directly towards the camera, such as a light bulb or sunshine through a window, can decrease the quality of the video feed and thereby the accuracy of the hand gesture detection.

Installation behind glass

The sensor works through glass or any clear transparent material through which there is full vision. When installing the camera behind glass, we recommend installing the lens as close as possible to the glass panel. This avoids light reflections in the camera's field of view.

Camera orientation

The sensor can be installed in 2 orientations:

• Default orientation: DisplayPort connector on bottom

• 180° rotated: DisplayPort connector on top

When installing the sensor in the 180 degrees orientation, please make sure to send the corresponding API commands for the sensor setup (see page 11).


Hand gestures can be interpreted and executed in many different ways. Although the AI engine is trained to detect a variety of executions, the sensor will perform best when the following guidelines are taken into account when a gesture is executed.

Open palm

The "Open palm" gesture is detected when all 5 fingers are stretched, resulting in the hand being "open".

Point

The "Pointing" gesture is detected when the index finger is stretched and the other 4 fingers are folded. In case the thumb is stretched as well, this will still be recognised as a "Pointing" gesture.

OK

The "OK" gesture is detected when the tips of the index finger and thumb are touching (resulting in a loop) and the other 3 fingers are stretched.

Thumb

The "Thumb" gesture is detected when the thumb is stretched and the other 4 fingers are folded.

Fist

The "Fist" gesture is detected when all 5 fingers are completely folded.

V

The "V" gesture is detected when the index finger and middle finger are stretched and the other 3 fingers are folded.
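The finger positions described above can be summarised as a simple lookup, which may be useful when writing user instructions or test scripts. The sketch below only restates the rules from this section. It does not represent how the sensor's AI engine detects gestures, and the function name `classify_static_gesture` and its parameters are hypothetical.

```python
def classify_static_gesture(thumb, index, middle, ring, pinky, loop=False):
    """Map finger states (True = stretched) to a gesture name from this section.

    `loop` is True when the tips of the index finger and thumb touch.
    Returns None when no described gesture matches.
    """
    if loop and middle and ring and pinky:
        return "OK"
    fingers = (thumb, index, middle, ring, pinky)
    if all(fingers):
        return "Open palm"
    if not any(fingers):
        return "Fist"
    if index and not (middle or ring or pinky):
        return "Point"  # the thumb may be stretched or folded
    if thumb and not (index or middle or ring or pinky):
        return "Thumb"
    if index and middle and not (thumb or ring or pinky):
        return "V"
    return None
```

For example, `classify_static_gesture(True, True, False, False, False)` returns "Point", reflecting that a stretched thumb does not change the "Pointing" gesture.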


The following gestures are dynamic gestures, meaning that they will be recognized when a sequence of hand movements is executed. As a result, dynamic gestures will typically have a higher non-detection rate in the field, as the sequence of hand movements can be executed differently, depending on the interpretation of the user.

Swipe

A "Swipe" gesture is detected when a person's hand moves from left to right in a smooth motion. The following will make it easier for the sensor to recognize the swipe gesture:

- Raise the hand at torso level, not higher or lower.

- Keep the hand in front of the torso or arm, don't spread the arms to the side.

- Instead of making the movement with the entire arm, make the swipe movement from the wrist or elbow.

- Within your swiping movement, start with the palm of the hand (slightly) directed towards the camera, and end with the back of the hand (slightly) directed towards the camera, or vice versa.

- Make sure the hand always remains completely in the Field of View of the sensor.

- Perform the swipe gesture at a natural speed. A slower swipe is not recognized more easily than a faster movement. If anything, a slightly swifter swipe is easier for the sensor to recognize.

Tap

A "Tap" gesture is detected when a person's hand is in "Point" position (see previous page) and then swiftly moves the index finger to the front and back (as if tapping in the air). The following will make it easier for the sensor to recognize the tap gesture:

- Make sure the palm of the hand is directed towards the camera. A tap gesture is not recognised from a side position.

- A tap gesture in the center of the sensor's Field of View is more easily recognised than a tap gesture in the outer areas.

Grab

A "Grab" gesture is detected when a person's hand is in "Open Palm" position (see previous page) and then swiftly moves to "Fist" position and back to "Open Palm" position. The following will make it easier for the sensor to recognize the grab gesture:

- Make sure the movement sequence "Open palm - Fist - Open palm" is executed swiftly. When performed too slowly, the gesture will not be recognized as a "Grab", but instead as 3 separate gestures (Open Palm, Fist, Open Palm).
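The timing rule for the "Grab" gesture can be illustrated with a short sketch that looks at a sequence of timestamped static gestures. This is only an illustration of the behaviour described above, not the sensor's internal logic. The function name `collapse_grabs` is hypothetical, and the 1.0 second limit is an arbitrary assumption, since this manual does not state how swift the sequence must be.

```python
def collapse_grabs(events, max_duration_s=1.0):
    """Replace each swift Open palm, Fist, Open palm run with "Grab".

    `events` is a time-ordered list of (timestamp_seconds, gesture_name).
    `max_duration_s` is an assumed limit, not a value from the manual.
    """
    pattern = ["Open palm", "Fist", "Open palm"]
    result = []
    i = 0
    while i < len(events):
        window = events[i:i + 3]
        names = [name for _, name in window]
        # A run only counts as a grab when it completes within the time limit.
        if names == pattern and window[2][0] - window[0][0] <= max_duration_s:
            result.append("Grab")
            i += 3
        else:
            result.append(events[i][1])
            i += 1
    return result
```

A sequence completed within the limit collapses into a single "Grab", while the same three gestures spread over a longer time are kept as 3 separate gestures.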
