## Conv1D based model

    import tensorflow as tf

    # TIMESERIES_LEN is the number of samples per input window;
    # the output shapes in the summary below imply a value of 50.
    model = tf.keras.models.Sequential([
        tf.keras.layers.Conv1D(filters=16, kernel_size=3, activation='relu',
                               input_shape=(TIMESERIES_LEN, 3)),
        tf.keras.layers.Conv1D(filters=8, kernel_size=3, activation='relu'),
        tf.keras.layers.Dropout(0.5),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(64, activation='relu'),
        tf.keras.layers.Dense(4, activation='softmax')
    ])

    _________________________________________________________________
    Layer (type)                 Output Shape              Param #
    =================================================================
    conv1d (Conv1D)              (None, 48, 16)            160
    conv1d_1 (Conv1D)            (None, 46, 8)             392
    dropout (Dropout)            (None, 46, 8)             0
    flatten (Flatten)            (None, 368)               0
    dense (Dense)                (None, 64)                23616
    dense_1 (Dense)              (None, 4)                 260
    =================================================================
    Total params: 24,428
    Trainable params: 24,428
    Non-trainable params: 0

As the summary table above shows, the AI-car sensing node network model is defined by 24,428 trainable parameters. This count follows from the Conv1D-based topology chosen.
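The per-layer figures in the summary can be reproduced by hand. The following is a minimal sketch in plain Python, assuming the Keras defaults for `Conv1D` (stride 1, `'valid'` padding, one bias per filter) and assuming `TIMESERIES_LEN = 50`, a value inferred from the `(None, 48, 16)` output shape rather than stated in the text. The helper function names are illustrative only.

```python
def conv1d_params(kernel_size, in_channels, filters):
    """Kernel weights plus one bias per filter."""
    return kernel_size * in_channels * filters + filters


def conv1d_out_len(in_len, kernel_size):
    """Output length for stride 1 and 'valid' padding."""
    return in_len - kernel_size + 1


def dense_params(in_units, out_units):
    """Weight matrix plus one bias per output unit."""
    return in_units * out_units + out_units


TIMESERIES_LEN = 50  # assumption: inferred from the summary, not stated in the text

len1 = conv1d_out_len(TIMESERIES_LEN, 3)  # length after the first Conv1D
len2 = conv1d_out_len(len1, 3)            # length after the second Conv1D
flat = len2 * 8                           # Flatten: time steps x filters

params = {
    "conv1d": conv1d_params(3, 3, 16),    # 3 input channels (one per axis)
    "conv1d_1": conv1d_params(3, 16, 8),
    "dense": dense_params(flat, 64),
    "dense_1": dense_params(64, 4),
}
total = sum(params.values())

for name, count in params.items():
    print(f"{name}: {count}")
print(f"total: {total}")
```

The Dropout and Flatten layers contribute no parameters, so the four entries above account for the whole total. Most of the parameters sit in the first Dense layer, because it connects all 368 flattened features to 64 units.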

## 2.4.2 Model training

The AI-car sensing node network was trained on datasets of acceleration variations in the time domain, acquired:

• over a three-axis reference system (Δax, Δay, Δaz)

• with a frequency equal to 10 Hz

• with a sample time of 100 ms

Each dataset targets a specific car state: parking, normal driving, bumpy driving or skidding.
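As an illustration of how such recordings could be turned into training examples for the model above, the sketch below cuts one recording into fixed-length windows and attaches a label for the car state. It rests on several assumptions that the text does not confirm: a window length of 50 samples (inferred from the model summary), non-overlapping windows, one-hot labels, and the order of the four classes. `make_windows` is a hypothetical helper, not part of any library.

```python
SAMPLE_RATE_HZ = 10                  # from the text: 10 Hz, i.e. one sample every 100 ms
TIMESERIES_LEN = 50                  # assumption: inferred from the model summary
WINDOW_SECONDS = TIMESERIES_LEN / SAMPLE_RATE_HZ

# Assumption: the class order is hypothetical; the text only names the four states.
CAR_STATES = ["parking", "normal driving", "bumpy driving", "skidding"]


def make_windows(deltas, state, window_len=TIMESERIES_LEN):
    """Split one recording of (dax, day, daz) tuples into labelled windows.

    Windows do not overlap; trailing samples that do not fill a whole
    window are dropped.
    """
    label = [1.0 if s == state else 0.0 for s in CAR_STATES]
    windows = []
    for start in range(0, len(deltas) - window_len + 1, window_len):
        windows.append((deltas[start:start + window_len], label))
    return windows


# Hypothetical 12-second parking recording: no variation on any axis.
recording = [(0.0, 0.0, 0.0)] * 120
examples = make_windows(recording, "parking")

print(f"window duration: {WINDOW_SECONDS} s")
print(f"windows: {len(examples)}, label: {examples[0][1]}")
```

Each window has the shape expected by the first layer, `(TIMESERIES_LEN, 3)`, and the four-element label matches the four-unit softmax output.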

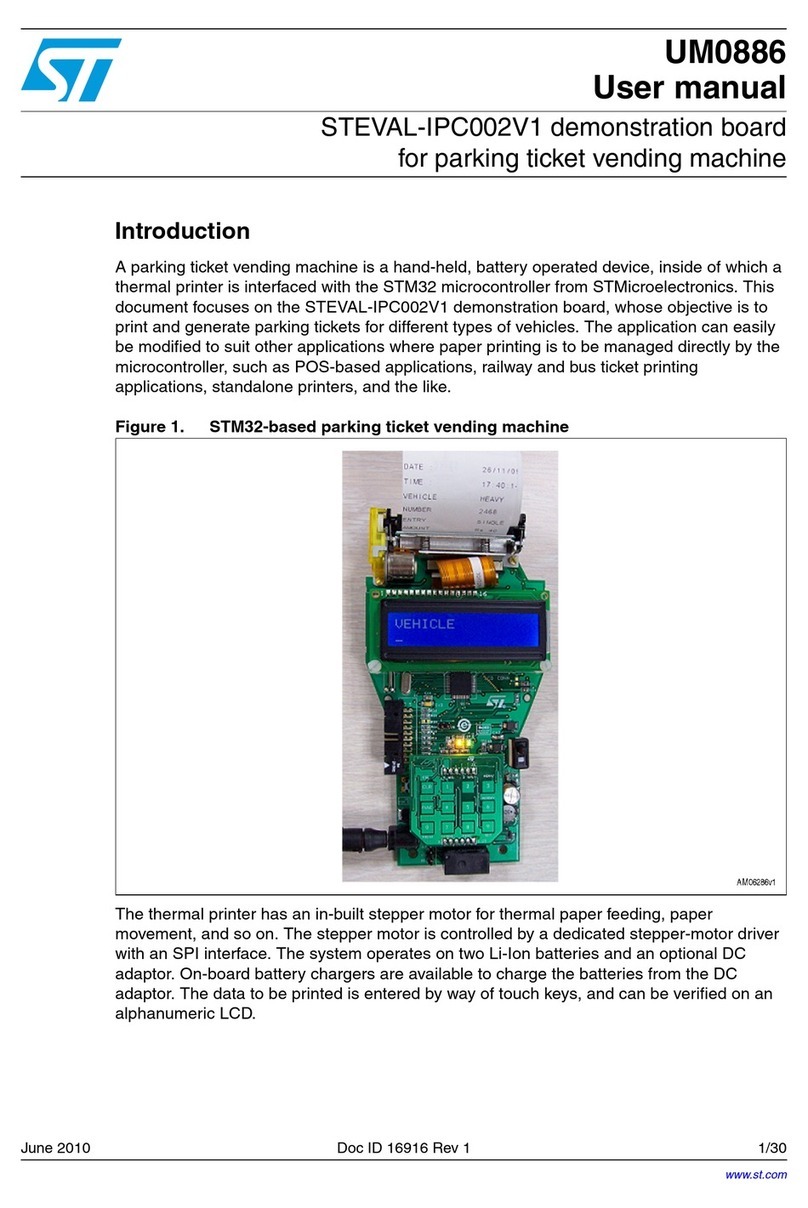

Using acceleration variations instead of raw acceleration data avoids misinterpreting the car state. If the car is parked on a road with a negative (or positive) slope (as shown in the figure below), the IMU registers a nonzero X-axis acceleration due to gravity, which would not be classified as the parking state. The acceleration variation in the time domain, however, is 0 while the car is stationary, which leads to the correct parking state.
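The slope example can be made concrete with a few lines of Python. This is a minimal sketch that assumes the acceleration variation is the difference between consecutive samples, which the text does not define explicitly. The numeric readings are hypothetical values in g, and `acceleration_deltas` is an illustrative helper.

```python
def acceleration_deltas(samples):
    """Return sample-to-sample differences for a list of (ax, ay, az) tuples."""
    return [
        tuple(curr - prev for prev, curr in zip(a, b))
        for a, b in zip(samples, samples[1:])
    ]


# Hypothetical readings for a car parked on a slope: gravity projects a
# constant nonzero component onto the X axis.
parked_on_slope = [(0.17, 0.0, 0.98)] * 5

deltas = acceleration_deltas(parked_on_slope)
print(deltas)
```

Although every raw sample has a nonzero X component, all the differences are zero, which is the same input a car parked on level ground would produce.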
